Product:

Planning Analytics 2.0.5 workspace

Microsoft Windows Server 2016

Problem:

You load a value into the TM1 application that represents the yearly cost of a company car, and you want to know the monthly cost. You have the start date and the accumulated sum up to the current month.

Starting solution:

This shows part of the solution, to help you understand the ParseDate function.

You must expand this solution to get a fully working TI process.

You can find better solutions here:

http://www.tm1forum.com/viewtopic.php?t=9736

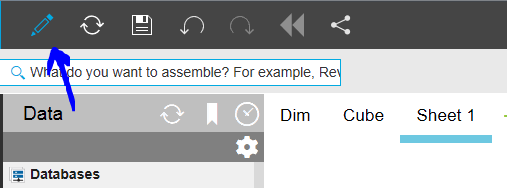

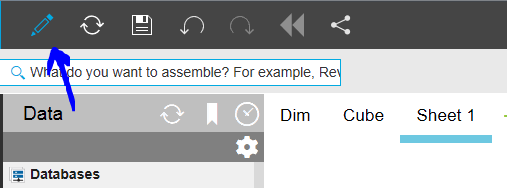

Log in to PAW (Planning Analytics Workspace) and open your application.

Click on the pencil to make it blue so you are in edit mode.

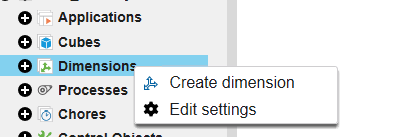

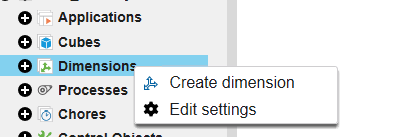

Right-click Dimensions to create a new dimension.

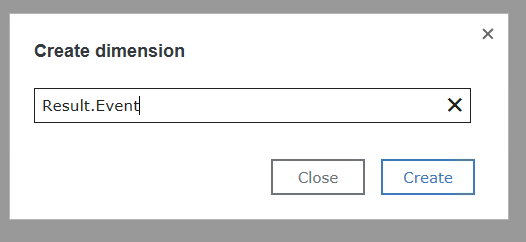

Enter the name of the dimension (Result.Event, which is used later when the cube is created) and click Create.

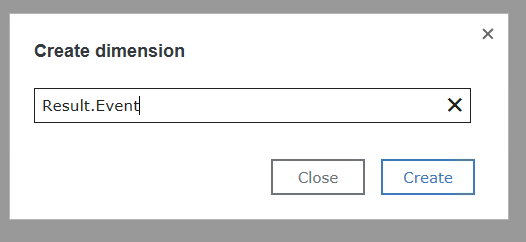

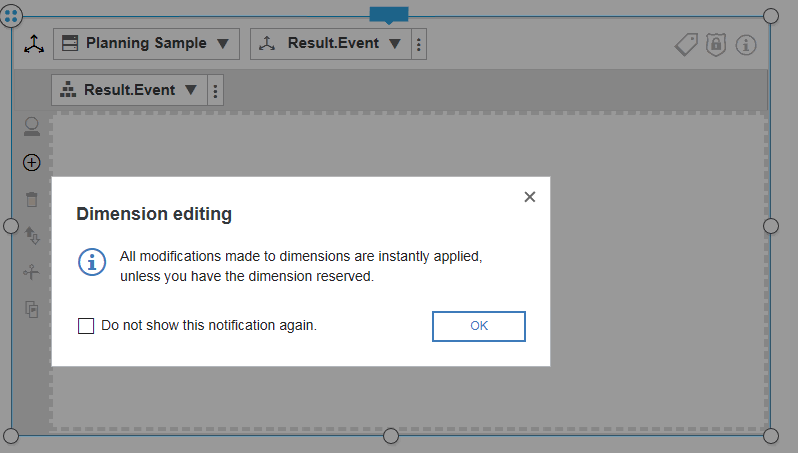

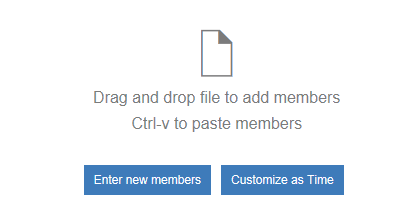

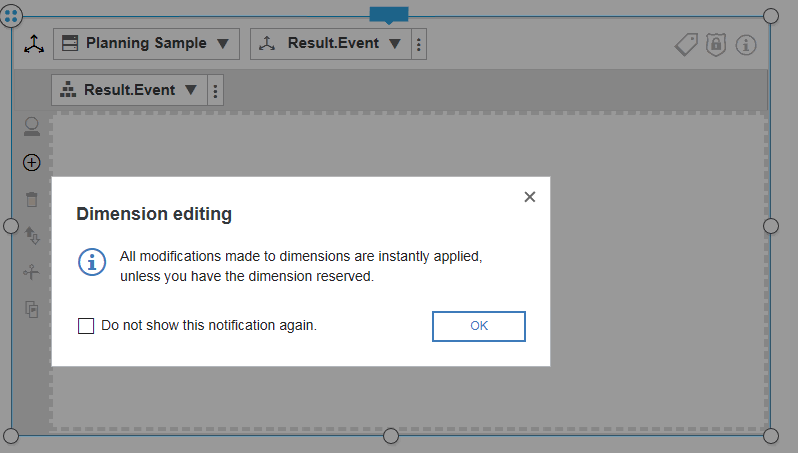

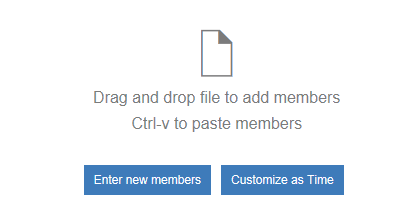

Click OK and click on enter new members

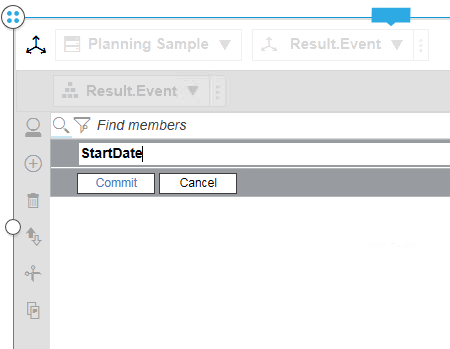

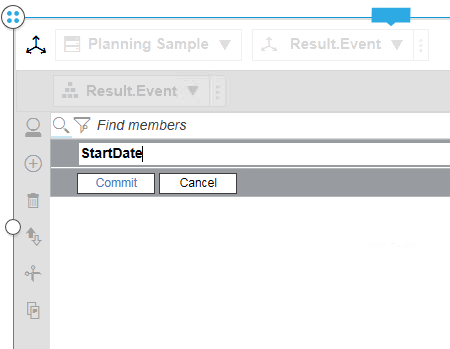

Enter StartDate and click on commit

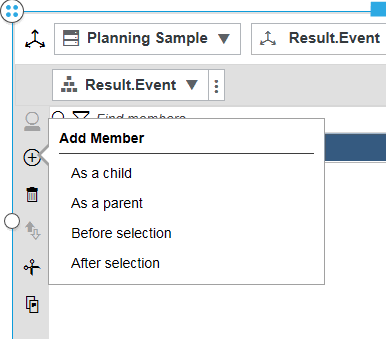

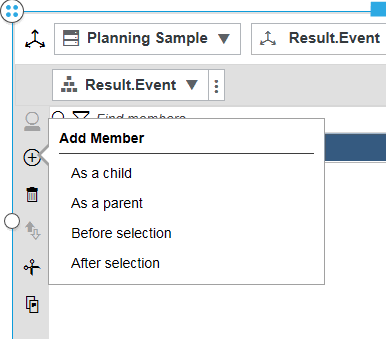

Click the plus sign and select After selection to add a new member to the dimension.

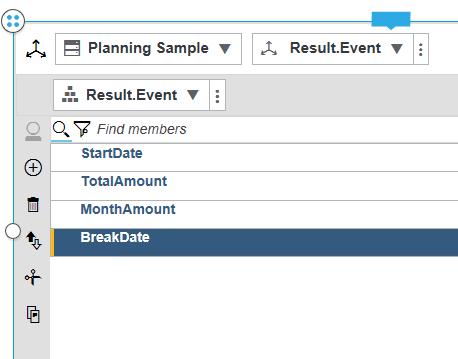

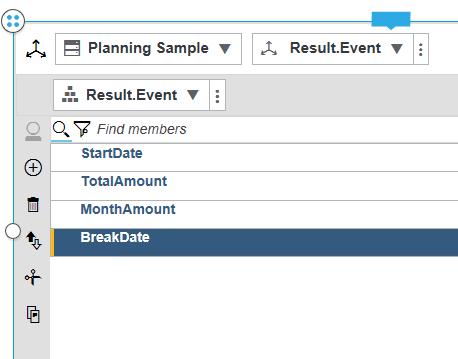

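Enter these values; they will be part of our cube to show the result. The code later in this post writes to the members StartDate, BreakDate, TotalAmount and MonthAmount.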

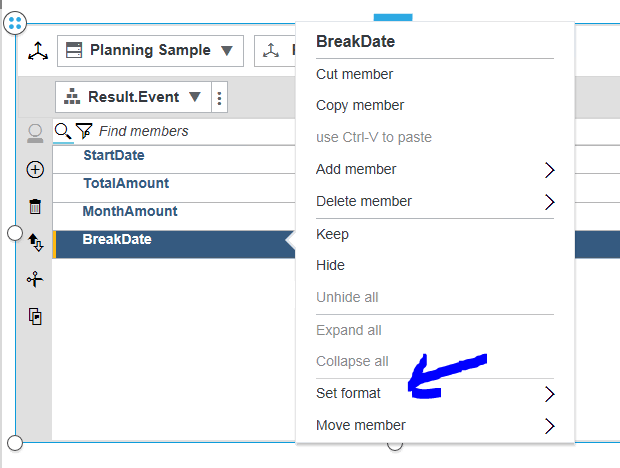

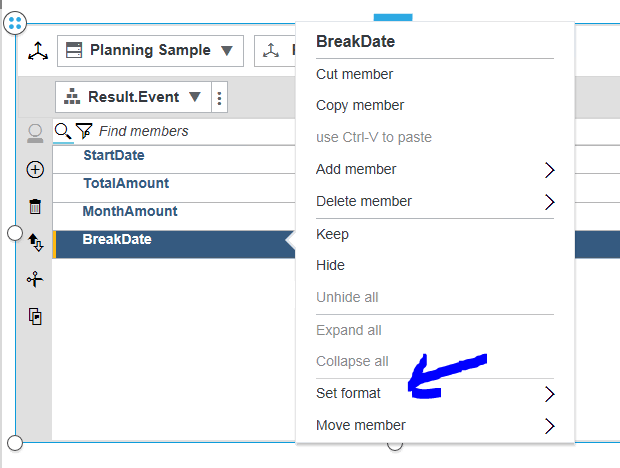

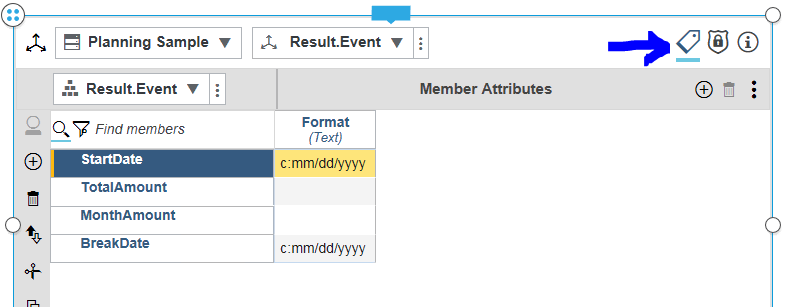

Select BreakDate and right-click to set the format to Date; repeat for StartDate.

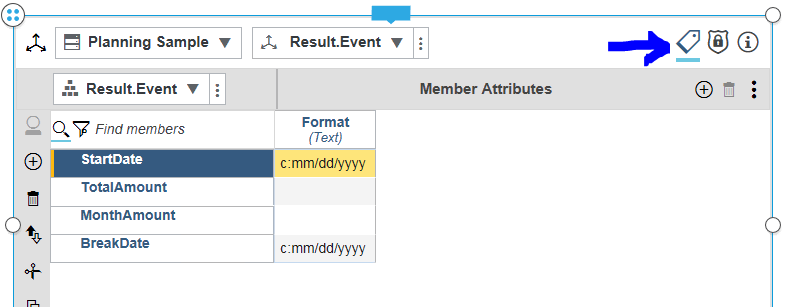

Click on the show members attributes to see the format.

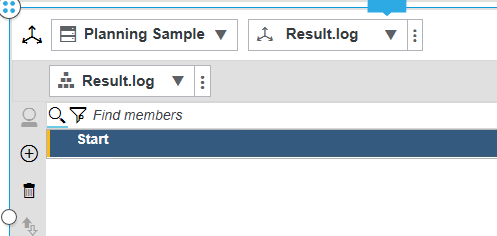

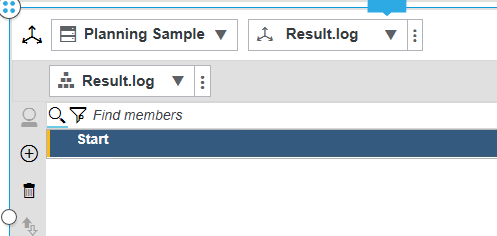

Create a new dimension named Result.Log with only one member.

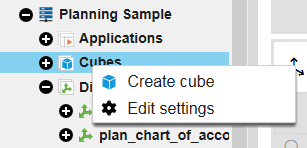

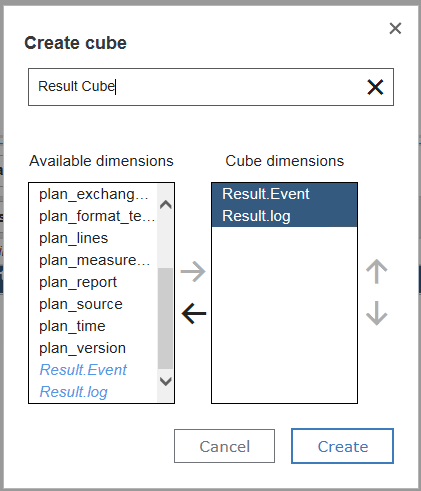

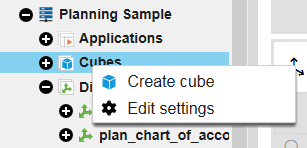

Right-click Cubes to create a new cube from the dimensions above.

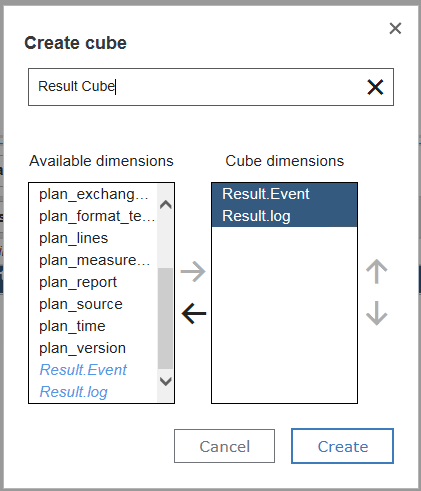

Select Result.Event and Result.Log and click the arrow to move them over.

Enter Result Cube as name of the cube, and click on Create.

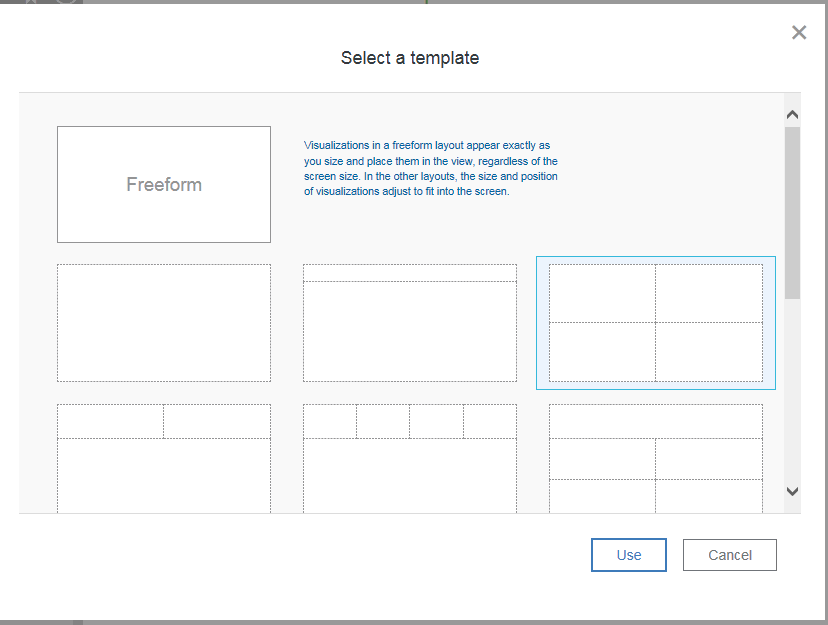

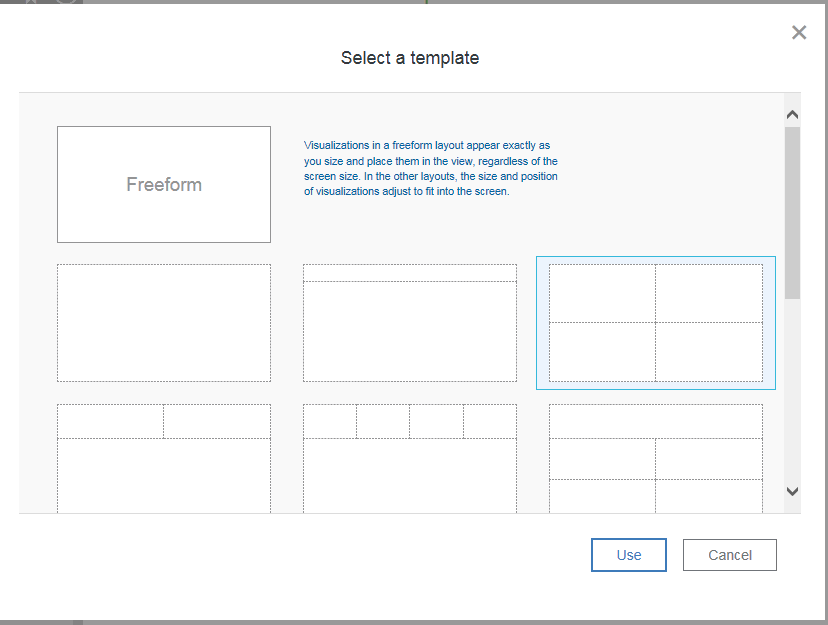

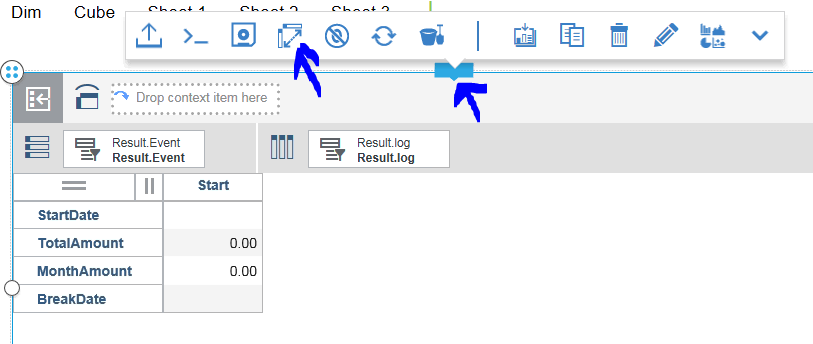

Click on the plus sign to add a new sheet. Select a boxed template.

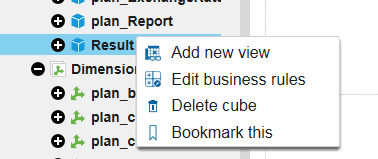

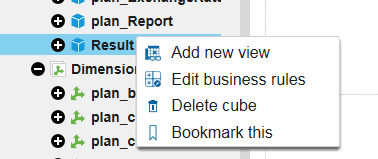

Select the cube, right-click it and select Add new view.

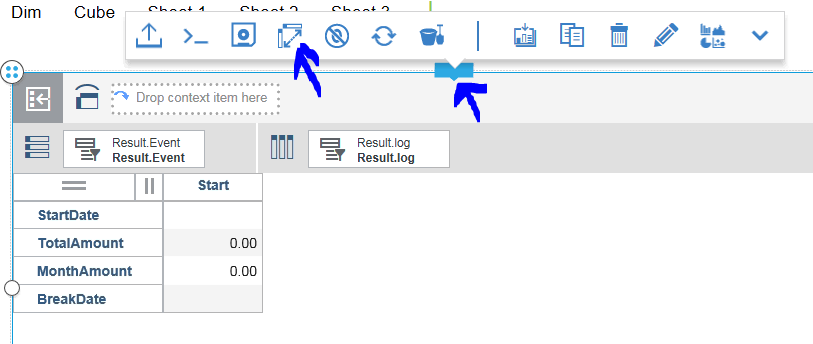

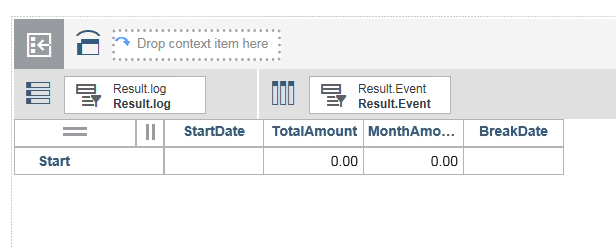

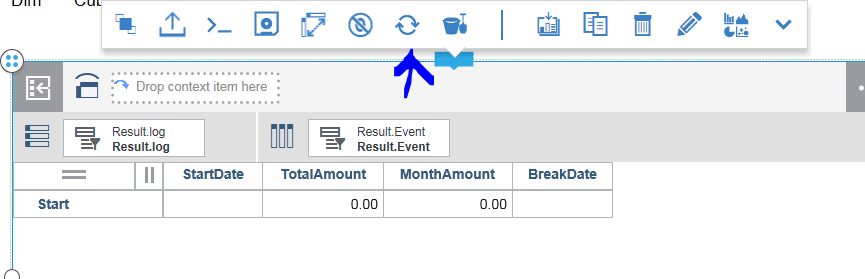

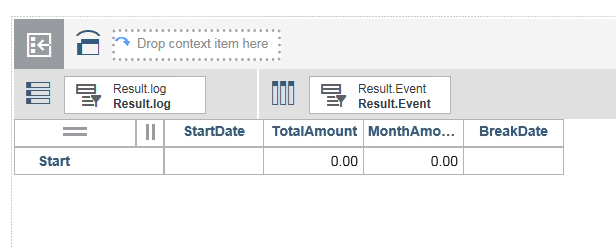

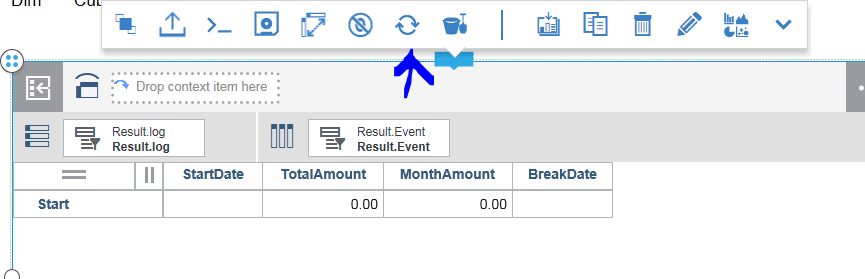

Click the box and then the icon to swap rows and columns, so that you get this:

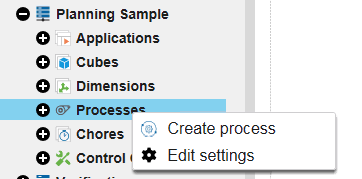

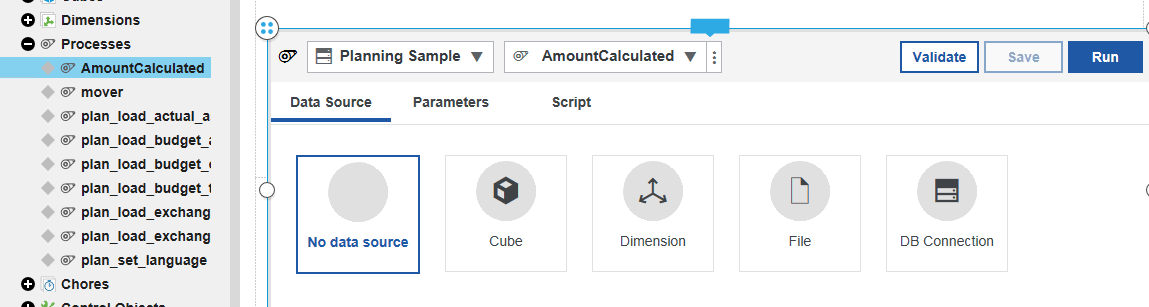

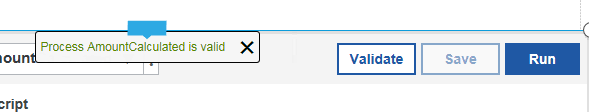

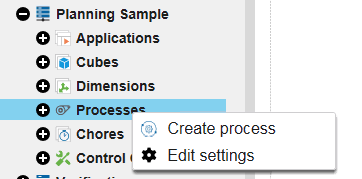

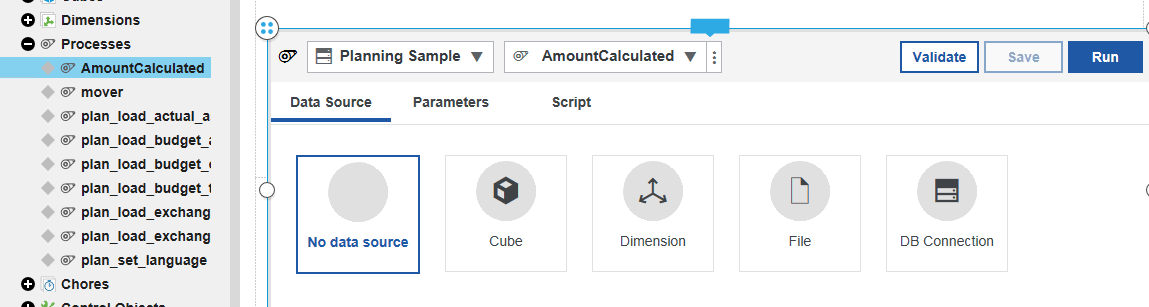

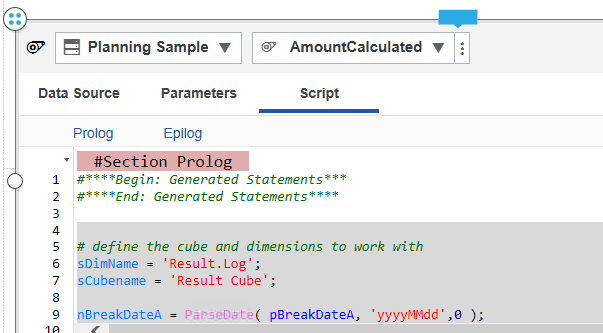

Right-click Processes to create a new process. Name the process AmountCalculated.

Expand the code window so you see most of it below the cube view.

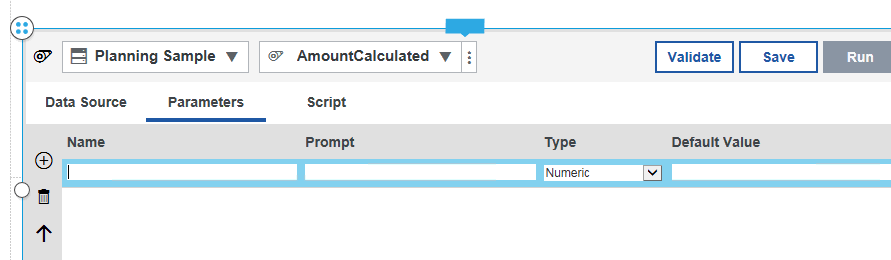

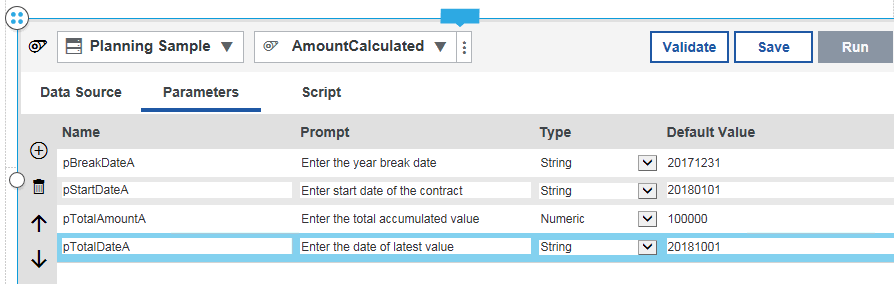

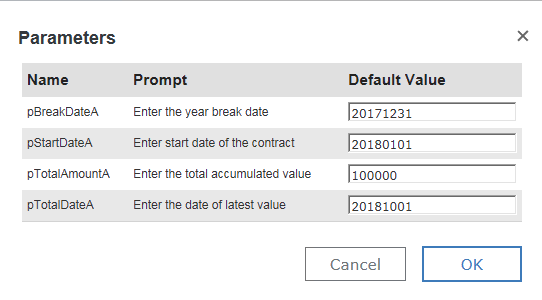

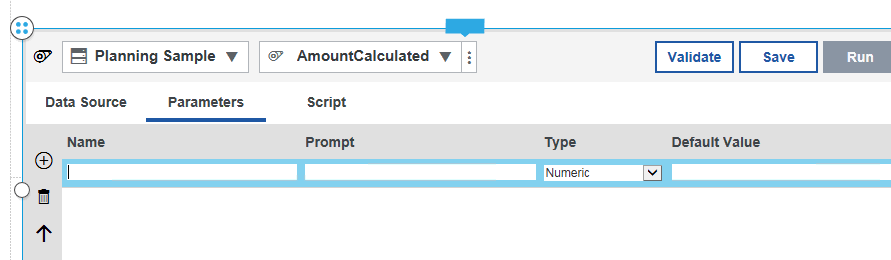

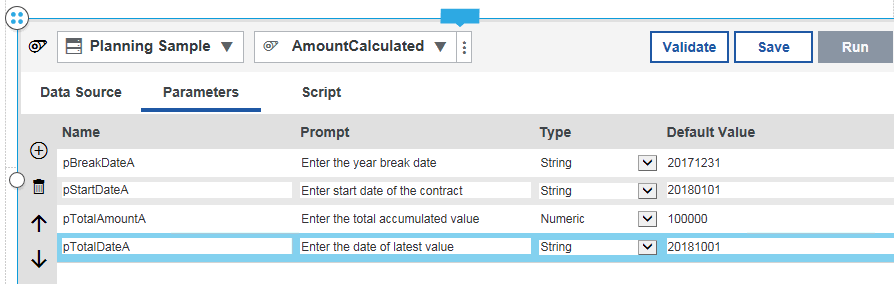

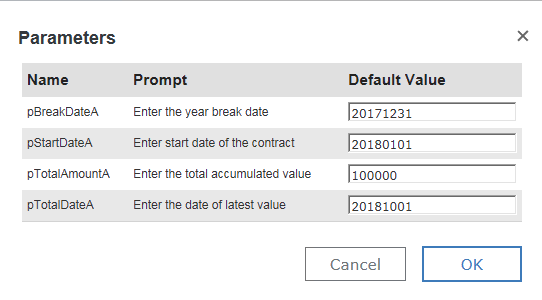

Click the Parameters tab, and click the plus sign to add input parameters. Here we will enter the values to test the process. In your solution you may get these values from a file instead.

Enter the names and start values shown above (the p prefix marks a parameter variable). The code uses pBreakDateA, pStartDateA and pTotalDateA as strings in yyyyMMdd format, and pTotalAmountA as a number.

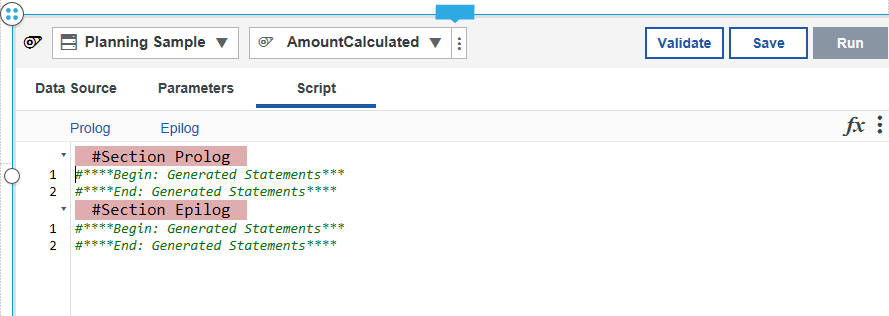

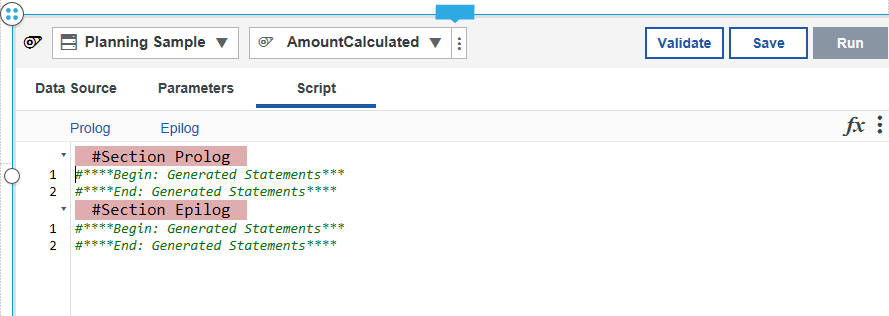

Open the Script tab to enter your code.

Enter the code below in the Prolog, after the End: Generated Statements line.

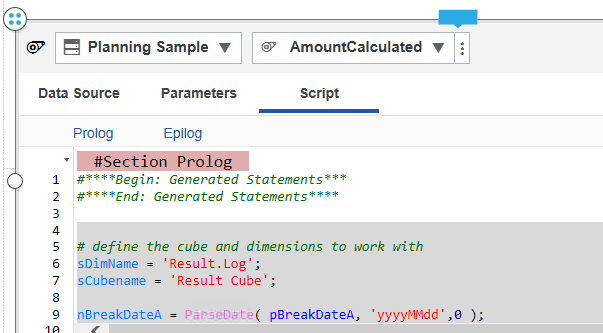

# define the cube and dimensions to work with

sDimName = 'Result.Log';

sCubename = 'Result Cube';

# convert the yyyyMMdd parameter strings to TM1 date serial numbers

nBreakDateA = ParseDate( pBreakDateA, 'yyyyMMdd', 0 );

nStartDateA = ParseDate( pStartDateA, 'yyyyMMdd', 0 );

# write variable values to a text file in the server's log folder for debugging

asciioutput( '../logfiles/debuglog1.txt', str( nBreakDateA, 8, 2 ), str( nStartDateA, 8, 2 ), numbertostring( pTotalAmountA ) );

# if the start date is before the break date, divide by 12

if ( nStartDateA < nBreakDateA );

nMonthAmountA = pTotalAmountA / 12;

else;

# check whether the start date and the total date are in different years

nStartYear = stringtonumber( subst( pStartDateA, 1, 4 ) );

nEndYear = stringtonumber( subst( pTotalDateA, 1, 4 ) );

# write variable values to a text file in the server's log folder for debugging

asciioutput( '../logfiles/debuglog3.txt', numbertostring( nStartYear ), numbertostring( nEndYear ), pStartDateA, pTotalDateA );

if ( nStartYear <> nEndYear );

nTotalDateA = ParseDate( pTotalDateA, 'yyyyMMdd', 0 );

# months between the two dates; note that this branch does not add 1, unlike the same-year branch below

nResult = ( ( YEAR( DATE( nTotalDateA ) ) - YEAR( DATE( nStartDateA ) ) ) * 12 ) + ( MONTH( DATE( nTotalDateA ) ) - MONTH( DATE( nStartDateA ) ) );

# write variable values to a text file in the server's log folder for debugging

asciioutput( '../logfiles/debuglog4.txt', numbertostring( nStartYear ), numbertostring( nEndYear ), numbertostring( nResult ) );

# if the total date is far from the start date, use the same formula, with nResult as the total number of months

nMonthAmountA = pTotalAmountA / nResult;

else;

# change here what values you want to use for the calculation

nStartmonth = stringtonumber( subst( pStartDateA, 5, 2 ) );

nEndmonth = stringtonumber( subst( pTotalDateA, 5, 2 ) );

nOfMonths = nEndmonth - nStartmonth + 1;

# calculate the value if the period is less than a year

nMonthAmountA = pTotalAmountA / nOfMonths;

endif;

endif;
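To check by hand what the Prolog should produce for a given set of parameters, the branches can be mirrored outside TM1. The following is only a Python sketch of the same arithmetic, not TI code; the function name `monthly_amount` and the sample values are made up for illustration. It compares the yyyyMMdd strings directly, which gives the same ordering as comparing the serial numbers from ParseDate.

```python
def monthly_amount(start: str, total: str, break_date: str, total_amount: float) -> float:
    """Mirror of the Prolog branches; all dates are yyyyMMdd strings."""
    # yyyyMMdd strings sort in date order, so a string comparison is enough here
    if start < break_date:
        return total_amount / 12

    start_year, start_month = int(start[:4]), int(start[4:6])
    end_year, end_month = int(total[:4]), int(total[4:6])

    if start_year != end_year:
        # same formula as nResult in the Prolog (no +1 in this branch)
        months = (end_year - start_year) * 12 + (end_month - start_month)
    else:
        # same formula as nOfMonths in the Prolog (inclusive month count)
        months = end_month - start_month + 1
    return total_amount / months


# start before the break date: yearly amount divided by 12
print(monthly_amount("20170101", "20180630", "20180101", 12000))  # 1000.0
# same year, March to June inclusive: 4 months
print(monthly_amount("20180301", "20180630", "20180101", 4000))   # 1000.0
# different years, March 2018 to March 2019: 12 months
print(monthly_amount("20180301", "20190301", "20180101", 1200))   # 100.0
```

Comparing these numbers with the MonthAmount cell after a test run is a quick way to confirm that the process follows the branch you expect.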

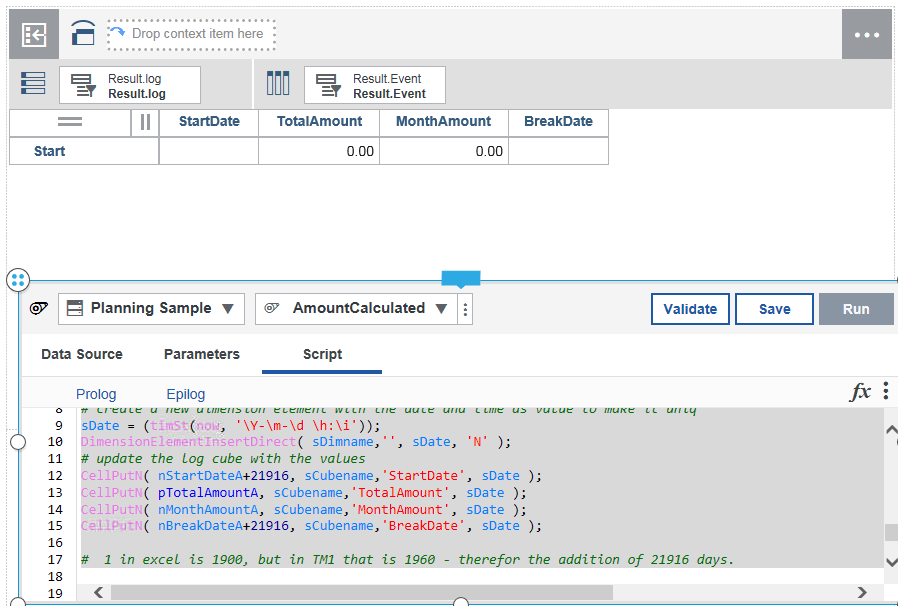

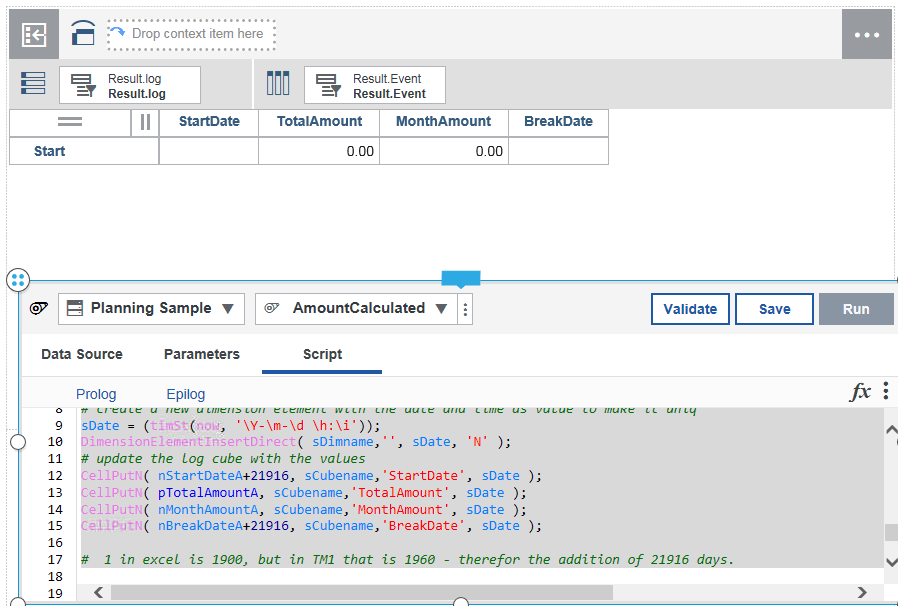

Then enter the code below in the Epilog.

# write variable values to a text file in the server's log folder for debugging

asciioutput( '../logfiles/debuglog2.txt', str( nStartmonth, 8, 2 ), numbertostring( nMonthAmountA ), numbertostring( pTotalAmountA ), numbertostring( nOfMonths ) );

# create a new dimension element named after the current date and time to make it unique

sDate = timSt( now, '\Y-\m-\d \h:\i' );

DimensionElementInsertDirect( sDimName, '', sDate, 'N' );

# update the log cube with the values

CellPutN( nStartDateA + 21916, sCubename, 'StartDate', sDate );

CellPutN( pTotalAmountA, sCubename, 'TotalAmount', sDate );

CellPutN( nMonthAmountA, sCubename, 'MonthAmount', sDate );

CellPutN( nBreakDateA + 21916, sCubename, 'BreakDate', sDate );

# Excel date serials start in 1900 but TM1 date serials start in 1960, hence the addition of 21916 days.
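The 21916 offset can be verified with a few lines of date arithmetic. The Python sketch below assumes that TM1 serial 0 is 1 January 1960 and that Excel's 1900 date system behaves as if day 0 were 30 December 1899 (which holds for dates from March 1900 onward); the helper names are hypothetical.

```python
from datetime import date

TM1_EPOCH = date(1960, 1, 1)      # assumed day 0 of the TM1 date serial
EXCEL_EPOCH = date(1899, 12, 30)  # effective day 0 of Excel's 1900 date system

OFFSET = (TM1_EPOCH - EXCEL_EPOCH).days


def to_date(yyyymmdd: str) -> date:
    """Parse a yyyyMMdd string, the format used for the process parameters."""
    return date(int(yyyymmdd[:4]), int(yyyymmdd[4:6]), int(yyyymmdd[6:8]))


def tm1_serial(yyyymmdd: str) -> int:
    """Days since 1960-01-01."""
    return (to_date(yyyymmdd) - TM1_EPOCH).days


def excel_serial(yyyymmdd: str) -> int:
    """Days since the Excel epoch, valid for dates after February 1900."""
    return (to_date(yyyymmdd) - EXCEL_EPOCH).days


print(OFFSET)                                                      # 21916
print(tm1_serial("20180301") + OFFSET == excel_serial("20180301"))  # True
```

So adding 21916 to a 1960-based serial gives the number that a 1900-based date format expects.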

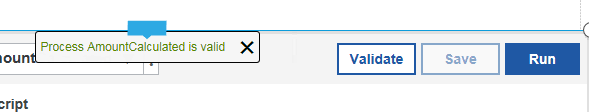

Click Validate and correct any quote characters that were pasted in the wrong format; TI needs straight single quotes (').

Save and run.

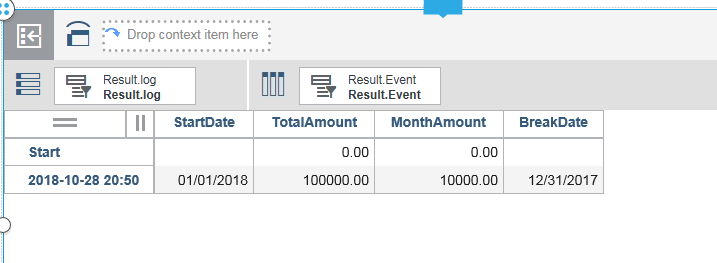

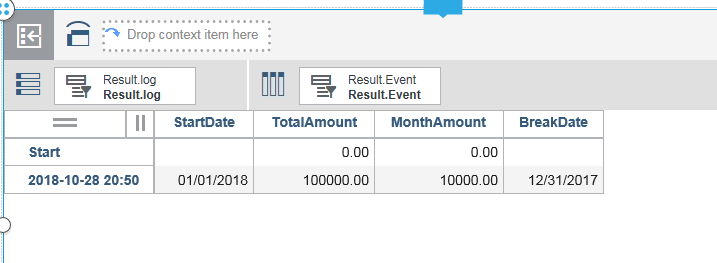

Enter your values and click OK.

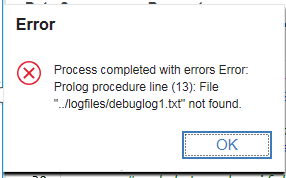

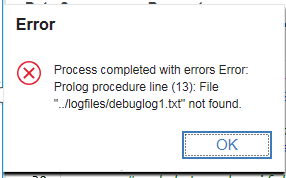

The error above appears if there is no logfiles folder next to the data folder.

Create the folder c:\Program Files\ibm\cognos\tm1_64\samples\tm1\logfiles if you use a sample TM1 application that does not have a separate log folder.

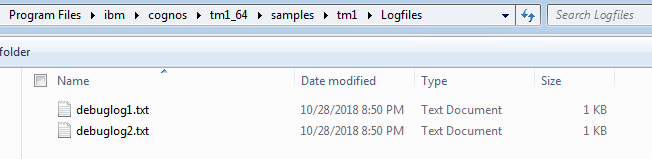

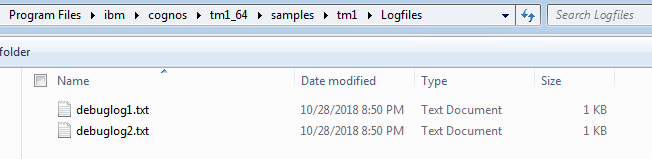

Connect to the server and check the log files to see the variable values.

Click on refresh for the view to see the result.

Things to review:

nBreakDateA = ParseDate( pBreakDateA, 'yyyyMMdd', 0 );

The code above converts the string to a date serial number, but it should be changed so that it does not use 1960 as the start date.

if ( nStartDateA < nBreakDateA );

The code above checks whether the start date is before a specific date. You can change this to check automatically whether the period covers 12 months.

if (nofmonths > 12);

# if the total date is far from the start date, use the same formula

nMonthAmountA = pTotalAmountA / nOfMonths;

else;

The code above checks whether the difference is more than 12 months. You can enhance it so that the else branch calculates the value from January to today's date only, for the case where the total covers only the current year.
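One possible reading of that enhancement, written as a Python sketch rather than TI: count the months inclusively, keep that count when it is more than 12, and otherwise assume the total has accumulated since January of the total date's year. The function name and the January assumption are illustrative only and need to be adapted to how your totals are actually accumulated.

```python
def months_to_divide_by(start: str, total: str) -> int:
    """Pick the divisor for the monthly amount; dates are yyyyMMdd strings."""
    start_year, start_month = int(start[:4]), int(start[4:6])
    end_year, end_month = int(total[:4]), int(total[4:6])

    # inclusive number of months from the start month to the total month
    span = (end_year - start_year) * 12 + (end_month - start_month) + 1
    if span > 12:
        return span

    # assumption: the total only covers the current year,
    # so divide by the months from January up to the total month
    return end_month


print(months_to_divide_by("20170301", "20180630"))  # 16
print(months_to_divide_by("20180301", "20180630"))  # 6
```

In the TI process the same decision would replace the fixed break date test, with the result used as the divisor for pTotalAmountA.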

# asciioutput( '../logfiles/debuglog1.txt', str( nBreakDateA, 8, 2 ), str( nStartDateA, 8, 2 ), numbertostring( pTotalAmountA ) );

Comment out the debug asciioutput lines in your code once the process works, as in the line above.

More information:

http://www.wimgielis.com/tm1_newdateformatter_EN.htm

https://www.exploringtm1.com/date-time-functions-tm1-10-2/

https://www.exploringtm1.com/accumulating-values-in-tm1/

Operators in TI IF Statements