Product:

Planning Analytics 2.0.9.13 TM1_version=TM1-AW64-ML-RTM-11.0.913.10-0

Microsoft Windows Server 2019

Problem:

How do you create a drill process?

Solution:

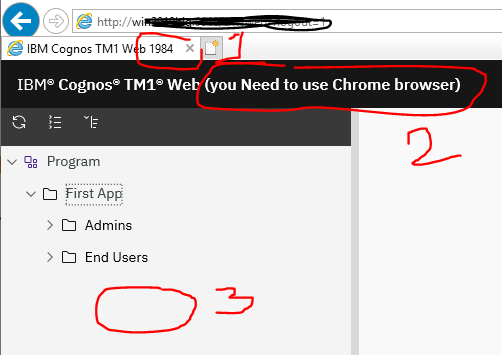

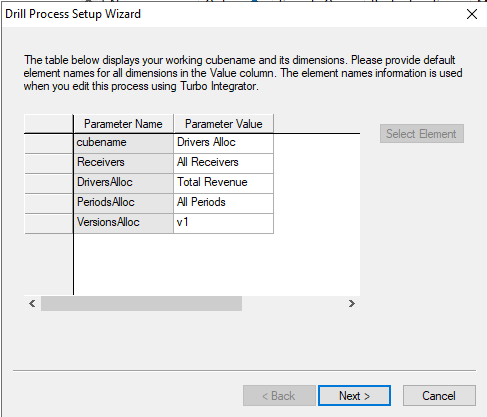

In the sample application proven_techniques, log in as ADMIN and create a drill from the cube “Drivers Alloc” to the cube “employeedetails”. This is not the best example, because these cubes do not contain much data.

First, right-click the cube “Drivers Alloc” and select Drill > Create Drill Process.

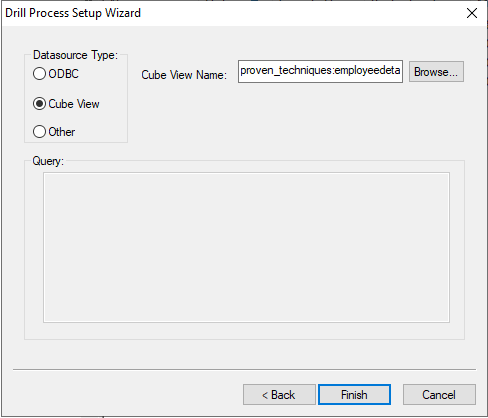

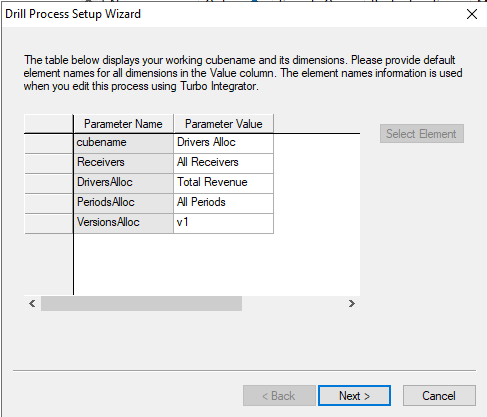

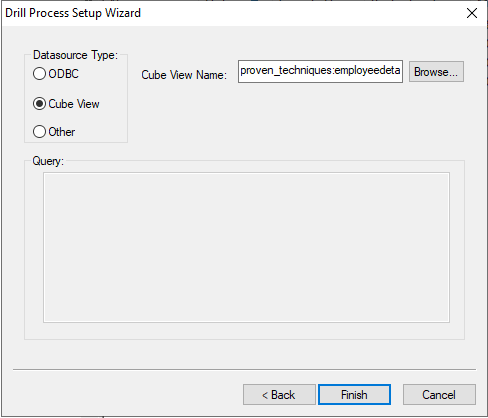

Click Next. Select Cube View as the source type, then select any view from the target cube “employeedetails”.

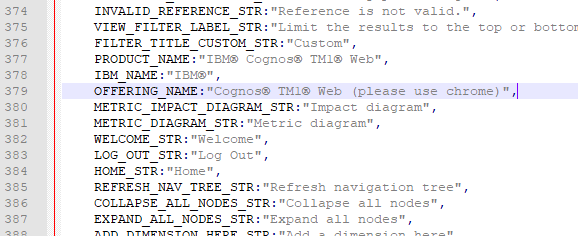

Click Finish and enter a name such as “TOemployees”. This creates the hidden process }Drill_TOemployees.

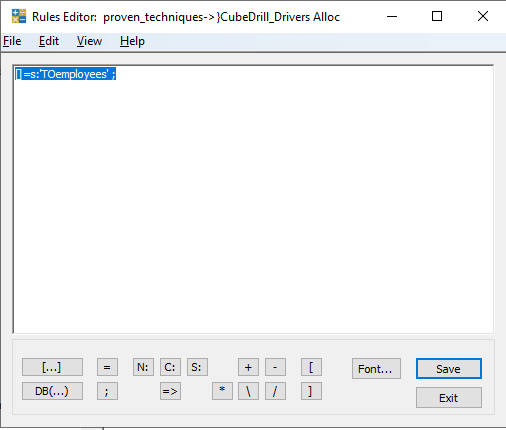

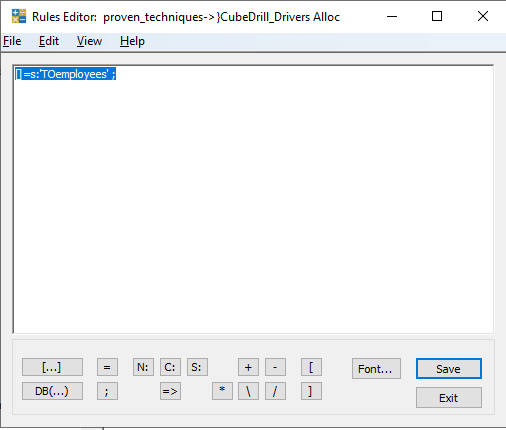

Right-click the cube “Drivers Alloc” and select Drill > Create Drill Assignment Rule.

Enter the following rule. It makes every cell in “Drivers Alloc” drillable through the process TOemployees:

```
[] = S:'TOemployees';
```

Then save it.
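The rule above makes every cell drillable, including consolidated cells. If you want drilling only from leaf cells, a more selective drill assignment rule could look like the sketch below. This sketch rests on two assumptions. First, it assumes that “Drivers Alloc” has a dimension named Receivers, which the generated Receivers parameter suggests. Second, it assumes that returning an empty string leaves a cell without a drill process. Check both in your own model before you use it.

```
# Sketch (not from the original note): assign the drill process only to
# leaf-level (level 0) Receivers elements; other cells get an empty string.
[] = S: IF( ELLEV( 'Receivers', !Receivers ) = 0, 'TOemployees', '' );
```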

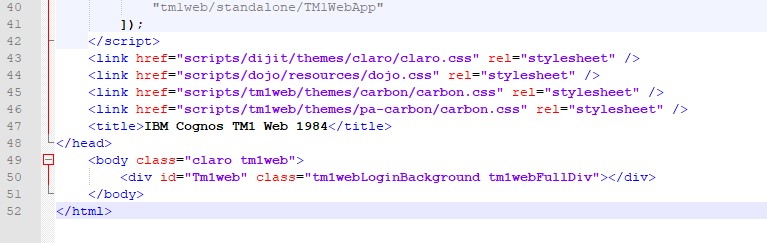

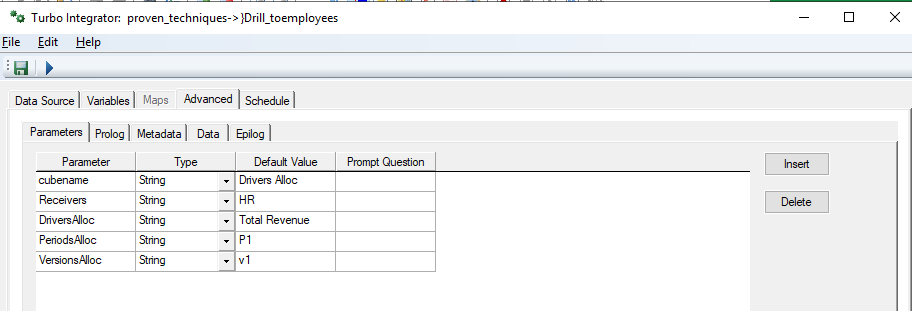

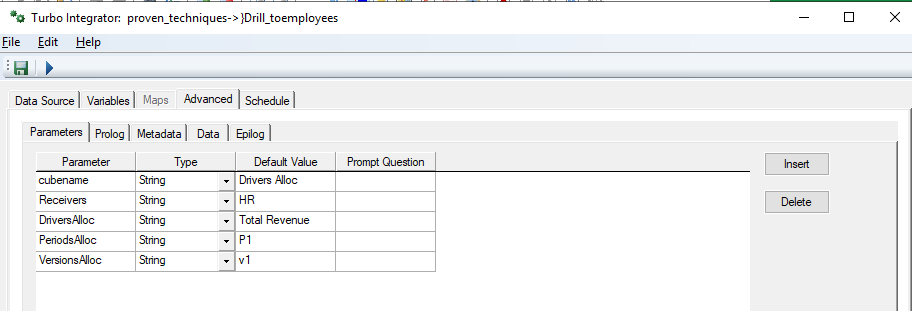

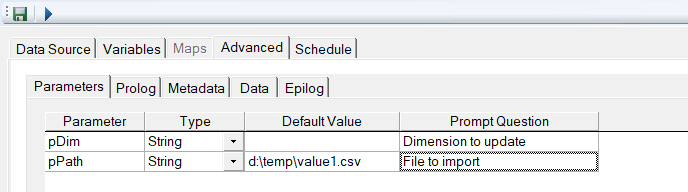

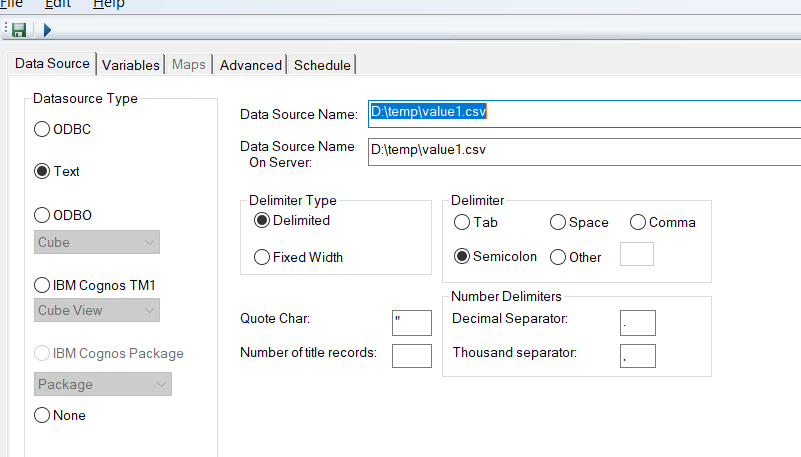

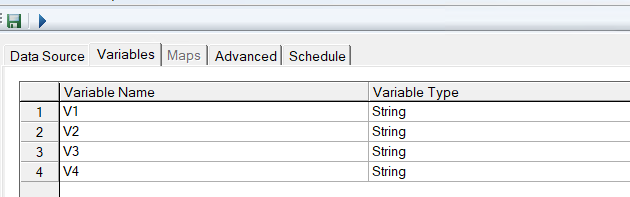

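Then open the TI process }Drill_TOemployees for editing. The wizard fills in the first tabs automatically from the source cube. The Parameters tab has one parameter per dimension of “Drivers Alloc”, for example Receivers. Each parameter receives the element of the cell you drill from.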
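While you test the drill, it can help to see which element the drill actually passes in. The sketch below is a temporary Prolog line that is not part of the original process, and the file name is hypothetical. It writes the Receivers parameter to a text file. It assumes that GetProcessErrorFileDirectory returns the server's log directory with a trailing path separator.

```
# Temporary debug line (hypothetical file name, not in the original process):
# write the Receivers parameter received from the drill to a text file
# in the directory returned by GetProcessErrorFileDirectory.
ASCIIOutput( GetProcessErrorFileDirectory | 'drill_TOemployees_debug.txt', Receivers );
```

Remove the line once the drill returns the expected view.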

In the Prolog, add this code below the generated statements:

```
#****Begin: Generated Statements***
#****End: Generated Statements****

# 'YES' = return the view built below; 'NO' = return the view chosen in the wizard (see Epilog)
ByPass = 'YES';

# set the name of the target cube where the view is built
pCube = 'employeedetails' ;

# specify the dimension names of the target cube
DimName1 = 'employees' ;
DimName2 = 'budget' ;
DimName3 = 'departments' ;
DimName4 = 'employeedetails' ;

# set constants for inserting into the view
# Element1 = 'Total Employees' ; not needed, only the lowest-level members are selected by MDX below
Element2 = 'Budget' ;

# use the parameter passed in by the drill to select the department in the view
Element3 = Receivers ;

# Element4 = 'Current Salary' ; not needed, all members are included by leaving this dimension out of the code

# set the view and subset name (the same name is reused on every run)
sView = 'Drill__' | pCube ;
sSubset = sView ;

# delete the view if it exists, then create it again
If( ViewExists( pCube, sView ) = 1 );
  ViewDestroy( pCube, sView );
EndIf;
ViewCreate( pCube, sView );

# delete the subset if it exists
If( SubsetExists( DimName1, sSubset ) = 1 );
  SubsetDestroy( DimName1, sSubset );
EndIf;

# create a subset with all leaf (level 0) elements of the dimension
sMDX = '{TM1FILTERBYLEVEL( {TM1SUBSETALL( [' | DimName1 | '] )}, 0)}' ;
SubsetCreateByMDX( sSubset, sMDX );
ViewSubsetAssign( pCube, sView, DimName1, sSubset );

# include only one element of the budget dimension in the view
If( SubsetExists( DimName2, sSubset ) = 1 );
  SubsetDeleteAllElements( DimName2, sSubset );
Else;
  SubsetCreate( DimName2, sSubset );
EndIf;
SubsetElementInsert( DimName2, sSubset, Element2, 1 );
ViewSubsetAssign( pCube, sView, DimName2, sSubset );

# include only the drilled department in the view
If( SubsetExists( DimName3, sSubset ) = 1 );
  SubsetDeleteAllElements( DimName3, sSubset );
Else;
  SubsetCreate( DimName3, sSubset );
EndIf;
SubsetElementInsert( DimName3, sSubset, Element3, 1 );
ViewSubsetAssign( pCube, sView, DimName3, sSubset );

# DimName4 has no total, so it is left out and all of its elements appear in the view

# set which dimensions go on rows, columns and title
ViewRowDimensionSet( pCube, sView, DimName1, 1 );
ViewColumnDimensionSet( pCube, sView, DimName4, 1 );
ViewTitleDimensionSet( pCube, sView, DimName3 );

# skip consolidated, rule-calculated and zero cells
ViewExtractSkipCalcsSet( pCube, sView, 1 );
ViewExtractSkipRuleValuesSet( pCube, sView, 1 );
ViewExtractSkipZeroesSet( pCube, sView, 1 );
```
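If the drilled Receivers element does not exist in the departments dimension, SubsetElementInsert is likely to fail with an error. The sketch below is a guard that is not part of the original process. You could place it directly after the line `Element3 = Receivers ;` in the Prolog. It uses DIMIX, which returns 0 when an element is not in the dimension.

```
# Hypothetical guard: stop the process cleanly when the drilled element
# is missing from the departments dimension (DimName3).
# ProcessQuit ends the process without running the Epilog, so no view is returned.
IF( DIMIX( DimName3, Element3 ) = 0 );
  ProcessQuit;
ENDIF;
```

What the user sees in this case depends on the client, so treat this as a starting point rather than finished error handling.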

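In the Epilog, add this code. The Prolog sets ByPass to 'YES', so the ELSE branch runs and returns the view built in the Prolog. If ByPass is set to 'NO', the view selected in the wizard is returned instead.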

```
IF( ByPass @= 'NO' );
#****Begin: Generated Statements***
RETURNVIEWHANDLE('employeedetails','employeedetails');
#****End: Generated Statements****
ELSE;
  RETURNVIEWHANDLE( pCube, sView );
ENDIF;
```

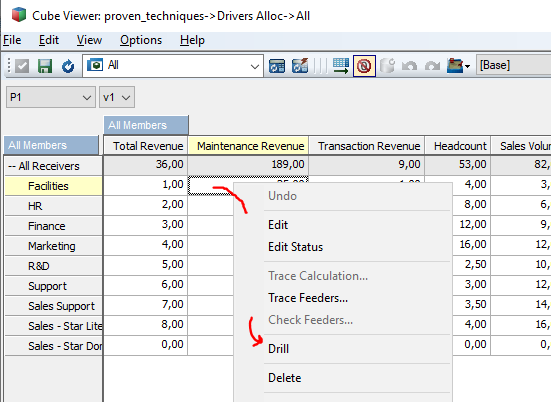

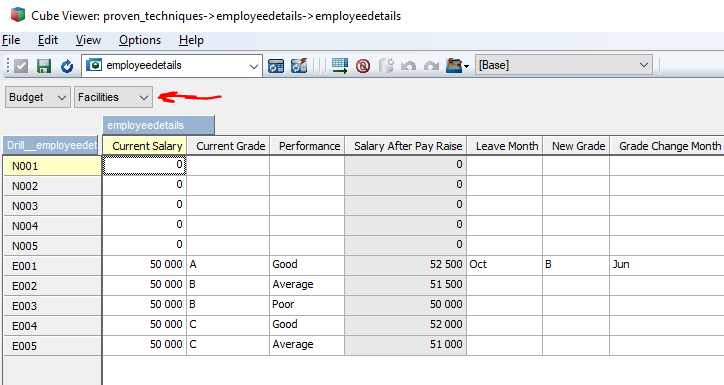

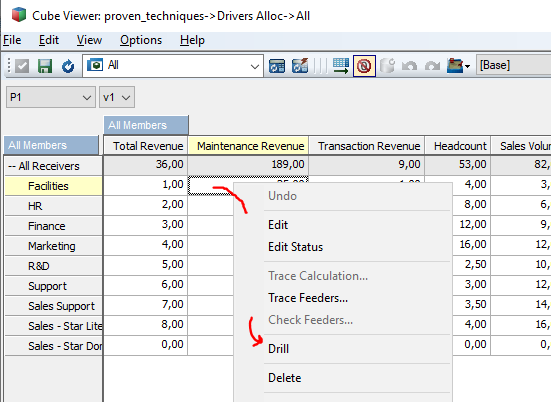

Right-click a lowest-level cell in “Drivers Alloc” and select Drill from the pop-up menu.

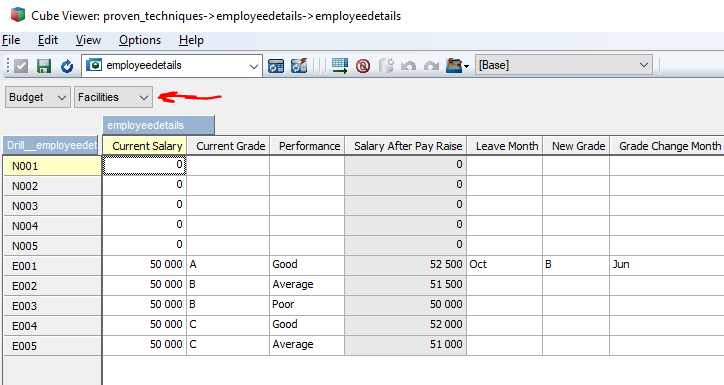

The view built by the TI process is displayed. Only the department selection changes from one drill to the next. That selection is set from the Receivers parameter, which is the only drill input used to build the view.

For more robust solutions, see the links below.

More Information:

https://www.wimgielis.com/tm1_neatmdxtricks_EN.htm

http://yinsolutions.blogspot.com/search/label/7.%20Planning%20Analytics%20%2F%20TM1

https://pmsquare.com/analytics-blog/2020/4/13/pa-drilling-through-to-greater-detail

https://quebit.com/askquebit/ibm-planning-analytics-setting-up-basic-drill-through-functionality/

https://lodestarsolutions.com/tm1-drill-through-from-cube-to-cube-not-working/

https://blogs.perficient.com/2011/10/13/cognos-tm1-drill-through-3/

https://quebit.com/askquebit/creating-effective-source-and-zero-out-views-in-planning-analytics/

https://code.cubewise.com/ti-helper

https://www.bihints.com/book/export/html/68

https://exploringtm1.com/drill-through-processes/
