Product:

Microsoft SQL Azure database

Issue:

How do you create a new database from a BACPAC file in SQL Azure?

The Azure portal only supports creating a single database in Azure SQL Database and only from a .bacpac file stored in Azure Blob storage.

Depending on how your firewall and network endpoints are set up in SQL Azure, you can run into different problems.

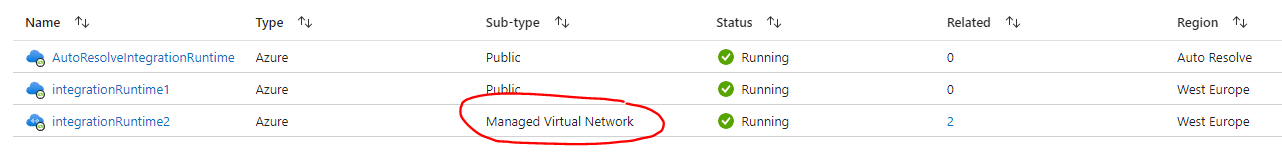

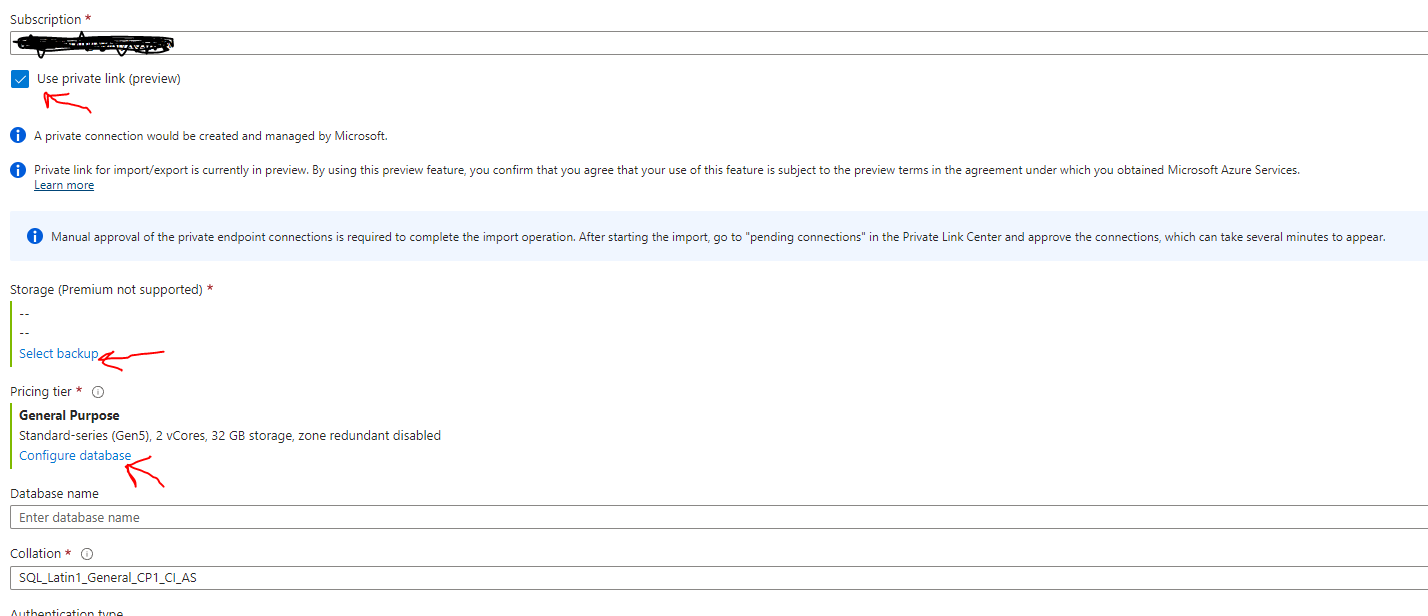

Error: The ImportExport operation failed because of invalid storage credentials. If you get this error, it can be that you need to select "use private link" for the subscription.

You need to select the backup bacpac file from your blob storage.

Select the database model (service tier and size) that gives the new SQL Azure database the capacity you need.

Enter a name that is easy to tell apart from the other databases.

The ImportExport operation with Request Id 'xxxxx-xxxxx' failed due to 'The server principal "donald" is not able to access the database "sqldatabasename-new" under the current security context.

Cannot open database "sqldatabasename-new" requested by the login. The login failed.

An error like the one above can mean that you do not have access to the database because the user account is not an admin on the SQL Azure server.

Another common issue is that the Blob storage does not allow access from the SQL Azure database.

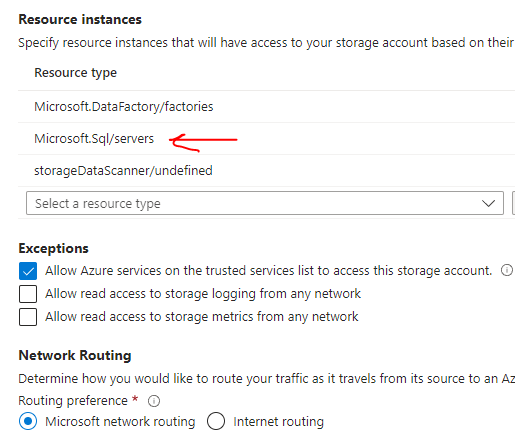

While the import above is running, you need to monitor both Private Endpoint Connections and the SQL Azure private access, so that you can approve the access request that the import process creates.

You may also need to add the SQL server as a resource type. The import may still fail if you have not given the SQL server access over the network to the Blob storage.

Solution:

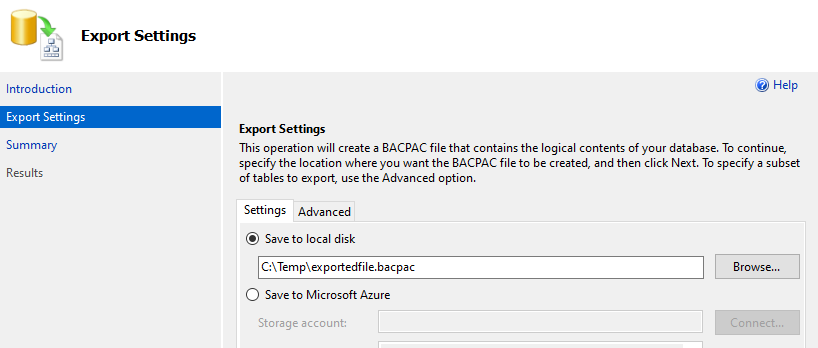

If you know you have access from your computer to the SQL Azure server, you should import the BACPAC file from your c:\temp folder with a PowerShell script.

.\SqlPackage.exe /Action:Import /SourceFile:"C:\temp\sqldatabasfilesavedname.bacpac" /TargetConnectionString:"Server=tcp:sqlservername.database.windows.net,1433;Initial Catalog=databasename_test_restore;Authentication=Active Directory Password;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;User ID=donald.duck@company.com;Password=xxxxxxx" /p:DatabaseEdition=Standard /p:DatabaseServiceObjective=S4

First you need to install the DacFramework.msi from https://learn.microsoft.com/en-us/sql/tools/sqlpackage/sqlpackage-download?view=sql-server-ver16

Then start PowerShell as administrator, go to the folder C:\Program Files\Microsoft SQL Server\160\DAC\bin, and run the script above after you change the names to match yours.

Parameters used in script:

.\SqlPackage.exe /Action:Import

= starts SqlPackage and sets the action to perform, in this case an import

/SourceFile:"C:\temp\sqldatabasfilesavedname.bacpac"

= the location and file name of the .bacpac file to restore from

/TargetConnectionString:"Server=tcp:sqlservername.database.windows.net,1433;

= the protocol, SQL server name, and port the script should use

Initial Catalog=databasename_test_restore;

= the name of the new database in SQL Azure

Authentication=Active Directory Password;MultipleActiveResultSets=False;Encrypt=True;TrustServerCertificate=False;Connection Timeout=30;

= connection settings; Authentication=Active Directory Password means that you log in with an Active Directory user name and password

User ID=donald.duck@company.com;

= the name of an administrator account; this account should not already exist as a user inside the database

Password=xxxxxxx"

= the password for the account

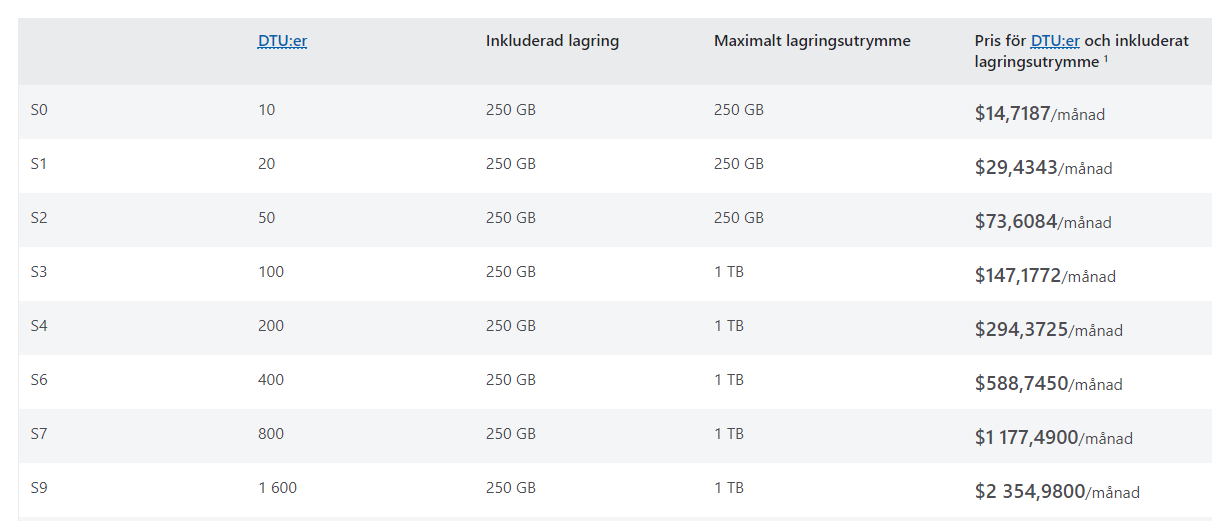

/p:DatabaseEdition=Standard

= the edition (service tier) of the SQL Azure database you want in the subscription

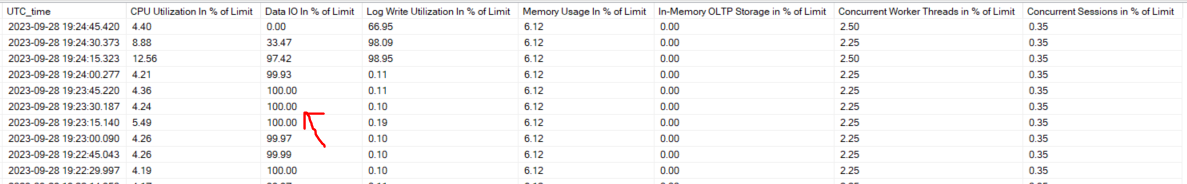

/p:DatabaseServiceObjective=S4

= the service objective (performance level, which determines the number of DTUs) that your new database starts with
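The parameters described above can also be assembled programmatically, which helps avoid quoting mistakes in the long connection string. The following is a minimal Python sketch, not part of SqlPackage itself: the function name `build_import_args` and its parameters are hypothetical, and all values are the placeholders from the example in this article.

```python
def build_import_args(bacpac_path, server, database, user, password,
                      edition="Standard", service_objective="S4"):
    """Assemble the SqlPackage import arguments described above.

    Hypothetical helper for illustration; the values passed in are
    placeholders and must be replaced with your own names.
    """
    # Join the individual connection settings with semicolons.
    connection_string = ";".join([
        f"Server=tcp:{server},1433",
        f"Initial Catalog={database}",
        "Authentication=Active Directory Password",
        "MultipleActiveResultSets=False",
        "Encrypt=True",
        "TrustServerCertificate=False",
        "Connection Timeout=30",
        f"User ID={user}",
        f"Password={password}",
    ])
    # Returned as a list, so no extra quoting is needed when it is
    # passed to subprocess.run.
    return [
        r".\SqlPackage.exe",
        "/Action:Import",
        f"/SourceFile:{bacpac_path}",
        f"/TargetConnectionString:{connection_string}",
        f"/p:DatabaseEdition={edition}",
        f"/p:DatabaseServiceObjective={service_objective}",
    ]


args = build_import_args(
    r"C:\temp\sqldatabasfilesavedname.bacpac",
    "sqlservername.database.windows.net",
    "databasename_test_restore",
    "donald.duck@company.com",
    "xxxxxxx",
)
for arg in args:
    print(arg)

# To start the import from the DAC\bin folder (assumption: SqlPackage.exe
# is in the current directory):
#   import subprocess
#   subprocess.run(args, check=True)
```

Keeping the password out of the script, for example by reading it from a prompt, is probably a better idea than hard-coding it as in this sketch.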

When the import starts, the output begins as shown below. Check the line with the server name to ensure it is correct.

Importing to database 'databasename_test_restore' on server 'tcp:sqlservername.database.windows.net,1433'.

Creating deployment plan

Initializing deployment

Verifying deployment plan

Analyzing deployment plan

Importing package schema and data into database

Updating database

Importing data

Processing Import.

Disabling indexes.

….

When done it should say something like this:

Successfully imported database.

Changes to connection setting default values were incorporated in a recent release. More information is available at https://aka.ms/dacfx-connection

Time elapsed 0:13:32.42
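If you save the output to a text file, the two checks mentioned above (correct server name at the start, success message at the end) can be automated. This is a small Python sketch under that assumption; the function name `summarize_import_log` is hypothetical, and the sample log only repeats lines shown in this article.

```python
import re

# Matches the first line of the import output; accepts straight or curly quotes.
TARGET_LINE = re.compile(
    r"Importing to database ['‘](?P<database>[^'’]+)['’] "
    r"on server ['‘](?P<server>[^'’]+)['’]"
)


def summarize_import_log(log_text, expected_server):
    """Return (server_matches, succeeded) for captured SqlPackage output."""
    match = TARGET_LINE.search(log_text)
    # The server in the log includes the "tcp:" prefix and the port,
    # so a substring check is used instead of an exact comparison.
    server_matches = bool(match) and expected_server in match.group("server")
    succeeded = "Successfully imported database." in log_text
    return server_matches, succeeded


sample_log = """Importing to database 'databasename_test_restore' on server 'tcp:sqlservername.database.windows.net,1433'.
Creating deployment plan
Importing data
Successfully imported database.
Time elapsed 0:13:32.42
"""

print(summarize_import_log(sample_log, "sqlservername.database.windows.net"))
# prints (True, True)
```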

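If you get the error below, it can be that you are connected over VPN and need to be at the office instead to be allowed to connect to the Azure SQL server. The message says that multi-factor authentication is required from your current location.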

The ImportExport operation with Request Id ‘xxxxx-xxxx’ failed due to ‘An error occurred while communicating with the SQL Server using AdPassword-login: AADSTS50076: Due to a configuration change made by your administrator, or because you moved to a new location, you must use multi-factor authentication to access ‘xxxxx-xxxxxx’

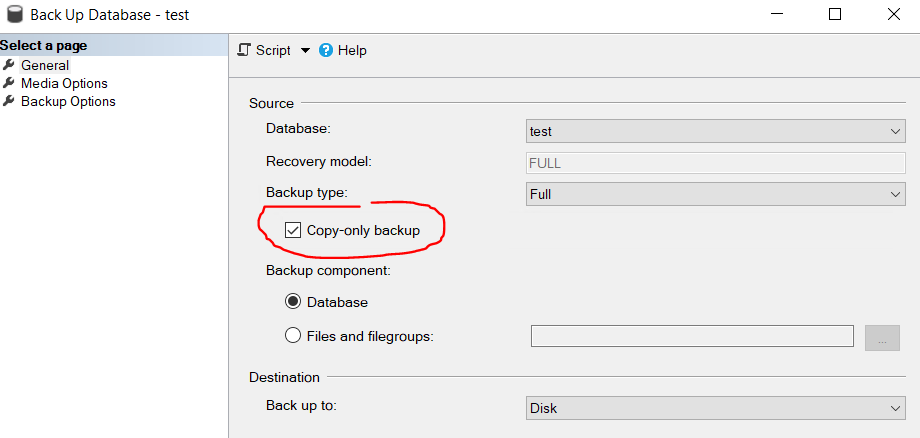

If you get the error below, the account you specified already exists as a user in the BACPAC file. You need to remove the user from the source database and export the data to a new BACPAC file.

Error SQL72014: Framework Microsoft SqlClient Data Provider: Msg 15063, Level 16, State 1, Line 1 The login already has an account under a different user name.

Error SQL72045: Script execution error. The executed script:

CREATE USER xxxxxxx

You may also need to remove the login for the account used for the import if it exists at the server level (in the master database).

To see the server accounts, run the query below on the master database:

SELECT A.name as userName, B.name as login, B.Type_desc, default_database_name, B.*

FROM sys.sysusers A

FULL OUTER JOIN sys.sql_logins B

ON A.sid = B.sid

WHERE islogin = 1 and A.sid is not null

More Information:

SqlPackage /a:import /tcs:"Data Source=<serverName>.database.windows.net;Initial Catalog=<migratedDatabase>;User Id=<userId>;Password=<password>" /sf:AdventureWorks2008R2.bacpac /p:DatabaseEdition=Premium /p:DatabaseServiceObjective=P6

SqlPackage /a:Import /sf:testExport.bacpac /tdn:NewDacFX /tsn:apptestserver.database.windows.net /ua:True /tid:"apptest.onmicrosoft.com"

https://learn.microsoft.com/en-us/sql/tools/sqlpackage/sqlpackage-import?view=sql-server-ver16

As an alternative to username and password, you can use Microsoft Entra ID (formerly Azure Active Directory). Currently, the Import/Export service does not support Microsoft Entra ID authentication when MFA is required. Substitute the username and password parameters for /ua:true and /tid:"yourdomain.onmicrosoft.com". This example shows how to import a database using SqlPackage with Microsoft Entra authentication:

SqlPackage /a:Import /sf:"c:\temp\theExportfileyouCreated.bacpac" /tdn:TheNewNameofDatabase /tsn:targetazuresqlservername.database.windows.net /ua:True /tid:"apptest.onmicrosoft.com"

Add /p:DatabaseEdition=Standard /p:DatabaseServiceObjective=S4 at the end of the line to get the DTU configuration you want.