Product:

Microsoft Power BI portal

Issue:

When refreshing a Power BI report (e.g., its semantic model), we got an error, even though this report previously worked in the Power BI portal workspace. The error looks like this:

Data source error{"error":{"code":"DMTS_OAuthTokenRefreshFailedError","pbi.error":{"code":"DMTS_OAuthTokenRefreshFailedError","details":[{"code":"DM_ErrorDetailNameCode_UnderlyingErrorMessage","detail":{"type":1,"value":" Device is not in required device state: compliant. Conditional Access policy requires a compliant device, and the device is not compliant. The user must enroll their device with an approved MDM provider like Intune…
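If you collect several of these refresh errors, a small script can pull out the underlying message and flag the Conditional Access "device not compliant" case. This is a minimal sketch, assuming the complete error body is valid JSON with the same nesting as the message above. The helper names `underlying_messages` and `is_device_compliance_error` are hypothetical, and the sample payload is a shortened, made-up example because the real message above is truncated (a truncated copy like that one would raise `json.JSONDecodeError`).

```python
import json


def underlying_messages(error_text: str) -> list[str]:
    """Return the underlying error messages from a Power BI data source error body."""
    # Skip a leading "Data source error" label if it was copied along with the JSON.
    start = error_text.find("{")
    if start == -1:
        return []
    payload = json.loads(error_text[start:])
    details = payload.get("error", {}).get("pbi.error", {}).get("details", [])
    messages = []
    for item in details:
        # Only the "underlying error message" detail carries the Entra ID text.
        if item.get("code") == "DM_ErrorDetailNameCode_UnderlyingErrorMessage":
            messages.append(item.get("detail", {}).get("value", "").strip())
    return messages


def is_device_compliance_error(error_text: str) -> bool:
    """True if any underlying message reports a non-compliant device."""
    return any(
        "required device state: compliant" in m for m in underlying_messages(error_text)
    )


# Hypothetical, shortened sample with the same structure as the error above.
sample = (
    'Data source error{"error":{"code":"DMTS_OAuthTokenRefreshFailedError",'
    '"pbi.error":{"code":"DMTS_OAuthTokenRefreshFailedError","details":['
    '{"code":"DM_ErrorDetailNameCode_UnderlyingErrorMessage",'
    '"detail":{"type":1,"value":" Device is not in required device state: compliant."}}]}}}'
)

if __name__ == "__main__":
    print(underlying_messages(sample))
    print(is_device_compliance_error(sample))
```

A plain substring check is used on purpose: the wording of the Entra ID message is the stable part, while the surrounding Power BI codes are shared by other token-refresh failures.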

Solution:

You must use the Microsoft Edge web browser to make this change; if you make the change in Chrome, it will not work.

On your report's semantic model, click the three dots and select “Settings”.

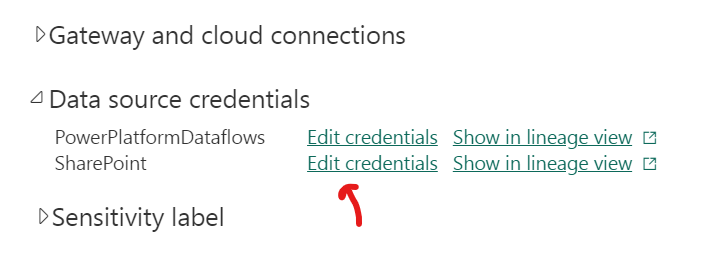

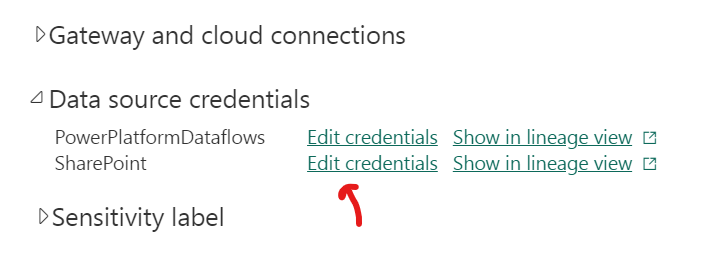

Go to Data source credentials and click Edit credentials on the data source that is marked as not working.

Enter your Windows account credentials again.

The data source should now show as working (green). This sign-in applies to all your reports that connect to the same data source.

Now click the “Refresh” icon to update your Power BI report's semantic model in the portal.

If the steps above do not work, try the following:

Download the semantic model (.pbix file) to your computer.

Restart your computer.

Sign in to your company Windows account.

Open the .pbix file in Power BI Desktop and refresh the report.

You will be prompted to sign in to the data source.

Enter your Windows account credentials.

When the report has refreshed and works in Power BI Desktop on your computer, save the report.

Publish the report to the Power BI portal, overwriting the previous report.

More Information:

https://learn.microsoft.com/en-us/entra/identity/conditional-access/concept-conditional-access-conditions

Blood, Sweat, and built-in compliance policy

There is a known issue with the Chrome browser that can cause this error. If no device information is sent in the sign-in logs, this might be the problem. Device information is sent only when there is a Primary Refresh Token (PRT) and the user is signed in to the browser. If the user is using Chrome, the Windows 10 Accounts extension is needed.

If this is the case, test by asking the user to sign in to the Edge browser, or to install the Windows 10 Accounts extension in Chrome, and check whether the issue is resolved.
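To check the "no device information" symptom, look at the device details of the failed sign-in. This is a minimal sketch that assumes sign-in records shaped like the Microsoft Graph `signIn` resource (`userPrincipalName`, `deviceDetail.deviceId`, `deviceDetail.browser`); how you fetch the records (Graph or an export of the sign-in logs) is left out. The helpers `missing_device_info` and `summarize` and the sample records are hypothetical.

```python
from typing import Any


def missing_device_info(sign_in: dict[str, Any]) -> bool:
    """True when a sign-in record carries no device ID, i.e. no device state was passed."""
    detail = sign_in.get("deviceDetail") or {}
    return not detail.get("deviceId")


def summarize(sign_ins: list[dict[str, Any]]) -> list[str]:
    """One line per sign-in that lacks device information, naming the browser used."""
    lines = []
    for s in sign_ins:
        if missing_device_info(s):
            browser = (s.get("deviceDetail") or {}).get("browser") or "unknown browser"
            lines.append(f"{s.get('userPrincipalName', '?')}: no device ID ({browser})")
    return lines


# Hypothetical records; real ones come from the sign-in logs.
records = [
    {
        "userPrincipalName": "user@contoso.com",
        "deviceDetail": {"deviceId": "", "browser": "Chrome 120.0.0", "isCompliant": False},
    },
    {
        "userPrincipalName": "user@contoso.com",
        "deviceDetail": {
            "deviceId": "00000000-0000-0000-0000-000000000001",
            "browser": "Edge 120.0.0",
            "isCompliant": True,
        },
    },
]

if __name__ == "__main__":
    for line in summarize(records):
        print(line)
```

If the failing sign-ins come from Chrome with an empty device ID while Edge sign-ins carry one, that matches the browser issue described above.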

https://learn.microsoft.com/en-us/azure/active-directory/conditional-access/concept-conditional-access-conditions

If the user signs in with Edge, they cannot use an InPrivate (incognito) window, because it does not pass the device state.

Is the Microsoft Authenticator app installed on the device? On iOS, Microsoft Authenticator acts as the broker app and is needed to pass MFA and device claims to Azure AD.

Sign-ins from legacy authentication clients also do not pass device state information to Azure AD.

Some years ago, Microsoft stated that in-app browsers must use a supported browser such as Edge. However, the Windows Store uses Edge, and it also does not pass the device ID, so the device cannot be evaluated as compliant by the Conditional Access policy.

Many third-party applications use in-app browsers that are “not supported,” and Microsoft does not appear to offer developer documentation that would let third parties include this Conditional Access device information in their in-app browsers, even if those browsers are based on Edge.

These are the links that were provided as dev resources:

https://www.graber.cloud/en/aadsts50131-device-not-required-state/

https://cloudbrothers.info/entra-id-azure-ad-signin-errors/

You may need to configure the following in Chrome to get it to work.

These are the exact requirements on the Chrome side (your Azure AD setup has its own requirements):

Latest “Chrome Enterprise Policy List”: https://support.google.com/chrome/a/answer/187202?hl=en

GPO Settings

User Configuration\Policies\Administrative Templates\Google\Google Chrome\HTTP Authentication

-Kerberos delegation server whitelist

autologon.microsoftazuread-sso.com,aadg.windows.net.nsatc.net

-Authentication server Whitelist

autologon.microsoftazuread-sso.com,aadg.windows.net.nsatc.net

Needed only if you block extensions from being installed, so that this extension is allowed:

User Configuration\Policies\Administrative Templates\Google\Google Chrome\Extensions

-Configure the list of force-installed apps and extensions (Enabled)

ppnbnpeolgkicgegkbkbjmhlideopiji

-Configure extension installation allow list (Enabled)

ppnbnpeolgkicgegkbkbjmhlideopiji

Note: I took that extension ID from the Chrome Web Store page for the Windows Accounts extension: https://chrome.google.com/webstore/detail/windows-accounts/ppnbnpeolgkicgegkbkbjmhlideopiji
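For testing on a single machine without Group Policy, the same settings can be written as registry values. This is a minimal sketch that generates a .reg file, assuming the GPO settings above map to Chrome's policy names `AuthNegotiateDelegateAllowlist`, `AuthServerAllowlist`, `ExtensionInstallForcelist`, and `ExtensionInstallAllowlist` (older Chrome versions use the "Whitelist" names) under `HKEY_CURRENT_USER\Software\Policies\Google\Chrome`. Verify the names against the Chrome Enterprise Policy List linked above before importing anything. The helper names are hypothetical.

```python
# Sketch: build a .reg file with the Chrome policies listed above.
# Assumption: policy names and layout follow the Chrome Enterprise Policy List,
# where list policies are subkeys holding numbered string values "1", "2", ...
CHROME_KEY = r"HKEY_CURRENT_USER\Software\Policies\Google\Chrome"
AUTH_SERVERS = "autologon.microsoftazuread-sso.com,aadg.windows.net.nsatc.net"
EXTENSION_ID = "ppnbnpeolgkicgegkbkbjmhlideopiji"  # Windows Accounts
WEB_STORE_UPDATE_URL = "https://clients2.google.com/service/update2/crx"


def reg_string(value: str) -> str:
    """Quote a string value for a .reg file (escape backslashes and quotes)."""
    return '"' + value.replace("\\", "\\\\").replace('"', '\\"') + '"'


def build_reg() -> str:
    lines = ["Windows Registry Editor Version 5.00", ""]
    # String policies from the HTTP Authentication section.
    lines.append(f"[{CHROME_KEY}]")
    lines.append(f'"AuthNegotiateDelegateAllowlist"={reg_string(AUTH_SERVERS)}')
    lines.append(f'"AuthServerAllowlist"={reg_string(AUTH_SERVERS)}')
    lines.append("")
    # List policies from the Extensions section: one numbered value per entry.
    lines.append(f"[{CHROME_KEY}\\ExtensionInstallForcelist]")
    lines.append(f'"1"={reg_string(EXTENSION_ID + ";" + WEB_STORE_UPDATE_URL)}')
    lines.append("")
    lines.append(f"[{CHROME_KEY}\\ExtensionInstallAllowlist]")
    lines.append(f'"1"={reg_string(EXTENSION_ID)}')
    # .reg files use CRLF line endings.
    return "\r\n".join(lines) + "\r\n"


if __name__ == "__main__":
    print(build_reg())
```

Save the output as a .reg file and import it with regedit, or simply compare it with what your GPO writes under the same key after `gpupdate`. The force-install entry uses the documented "extension ID;update URL" form, with the Chrome Web Store update URL.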

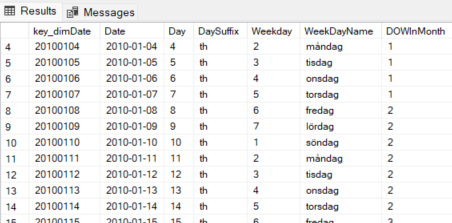

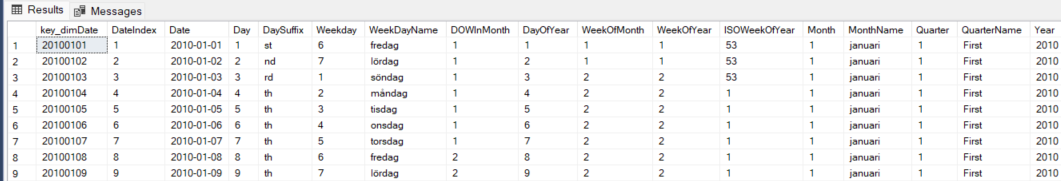

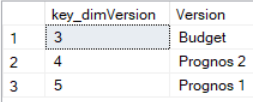

The key value for the version needs to be collected from the version table.

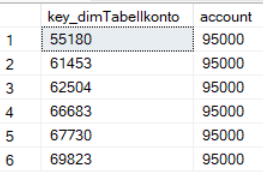


The account value you want to update in the fact table may have more than one key value; check for and include all of them.