Product:

Planning Analytics 2.0.9.3

Microsoft Windows Server 2019

Issue:

How do you install tm1py on a server that is not connected to the internet?

https://code.cubewise.com/tm1py-help-content/installing-tm1py-on-a-server-with-no-internet-connection

Solution:

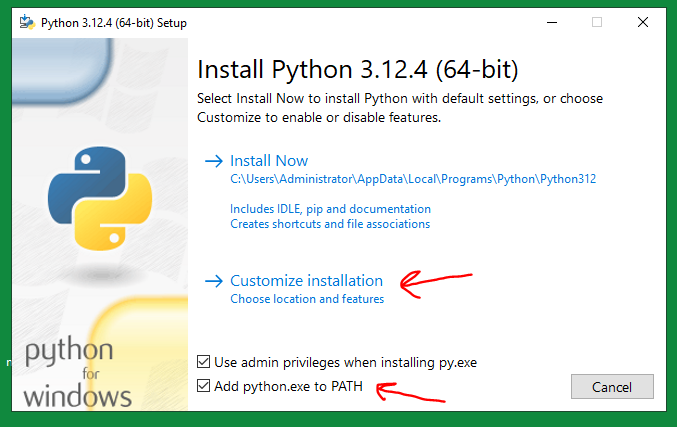

Download Python to both your laptop and the server, and install it on both: https://www.python.org/downloads/

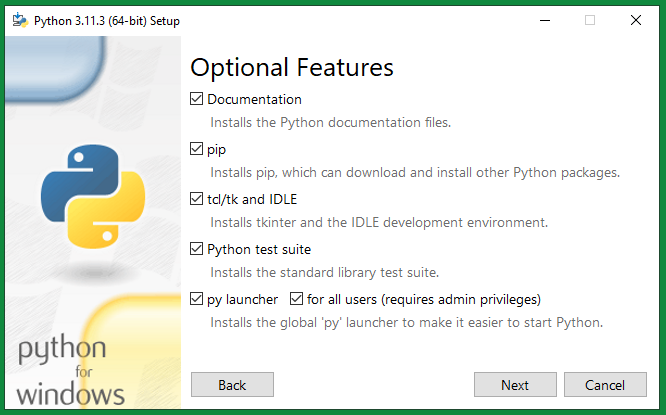

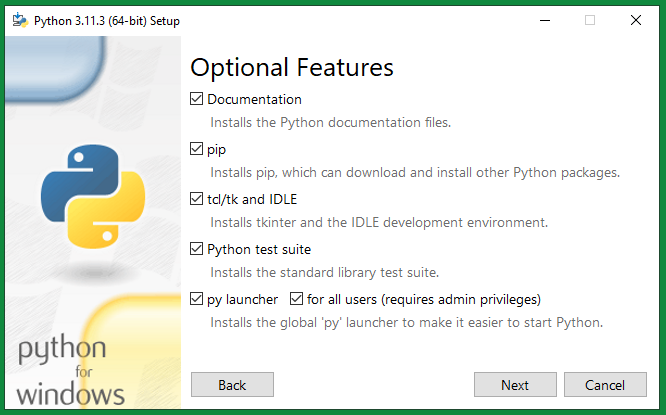

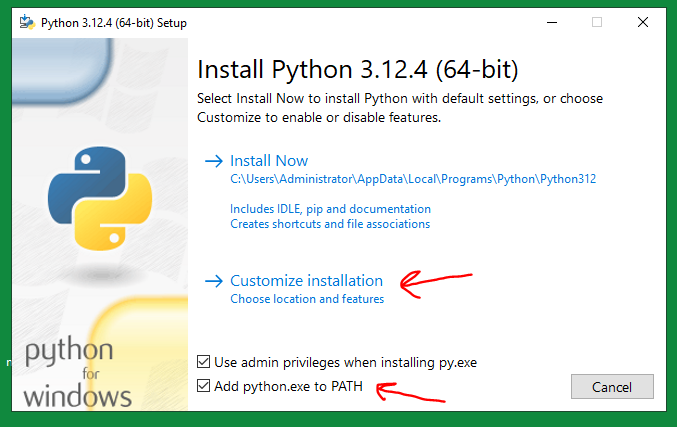

Run the python-3.11.3-amd64.exe to install the software.

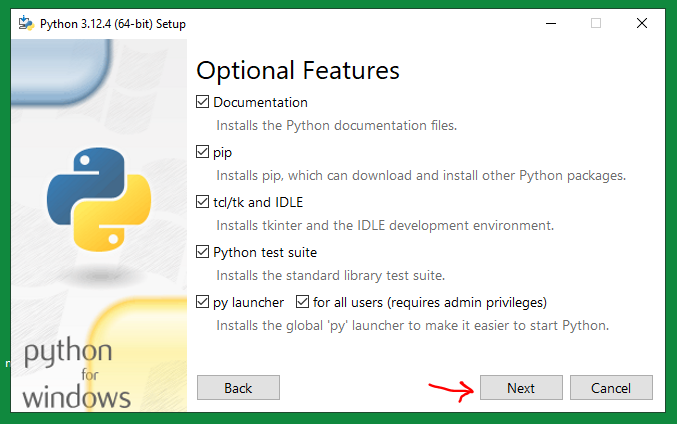

Select Customize installation (make sure you tick “Add Python to PATH”).

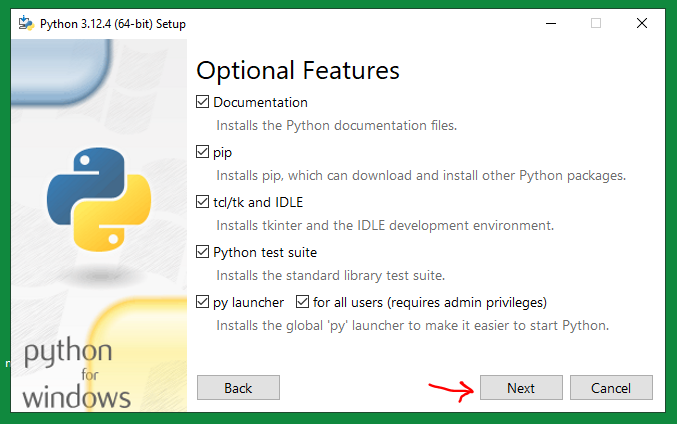

Click Next

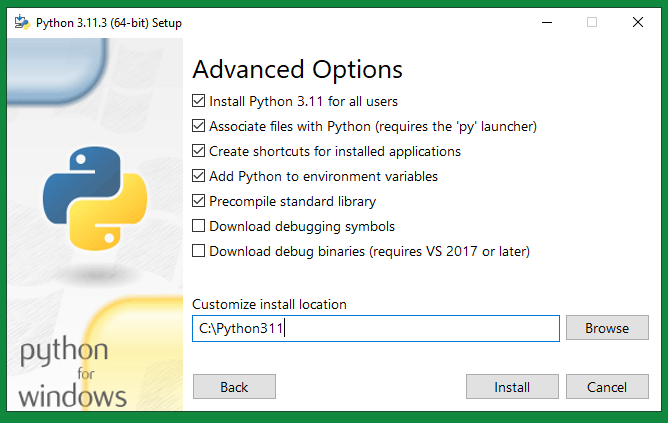

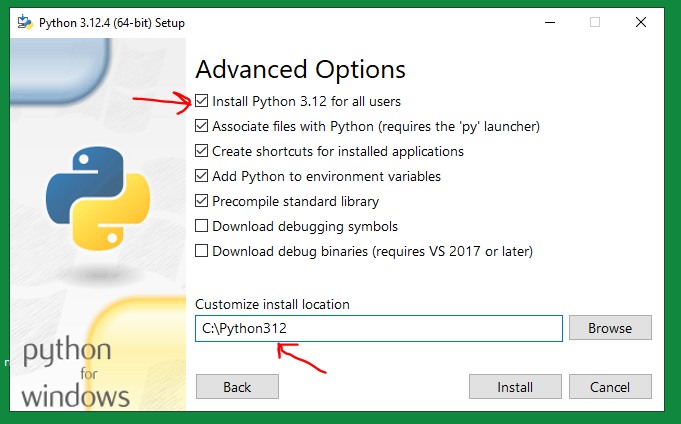

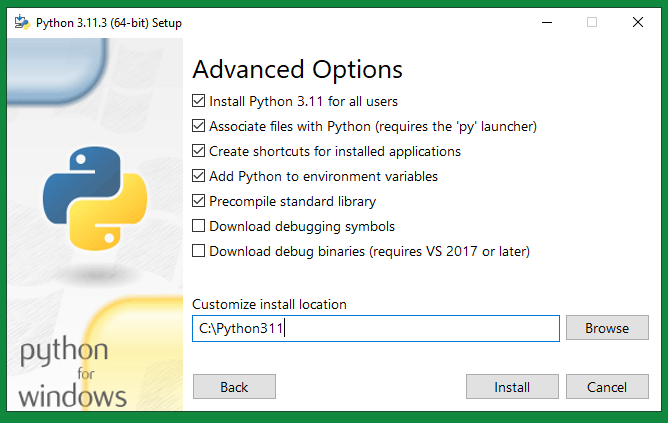

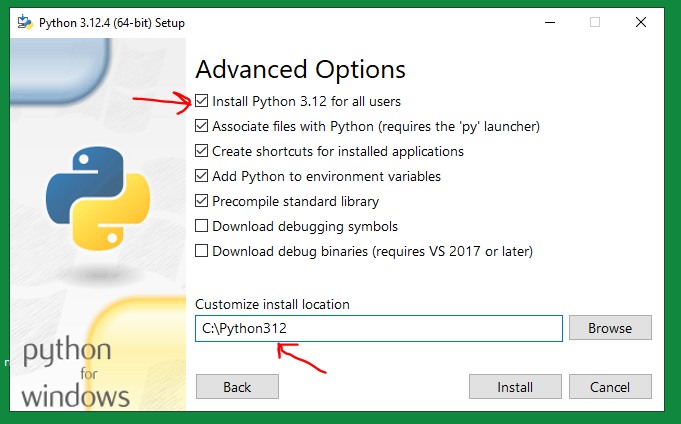

Select “Install Python for all users” and change the install path to a simpler one outside Program Files, for example C:\Python311.

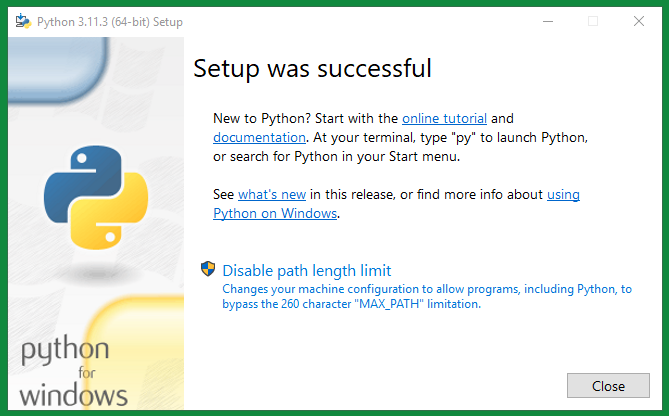

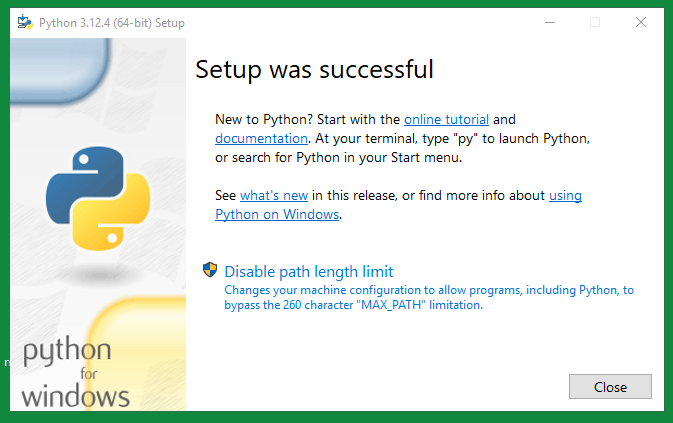

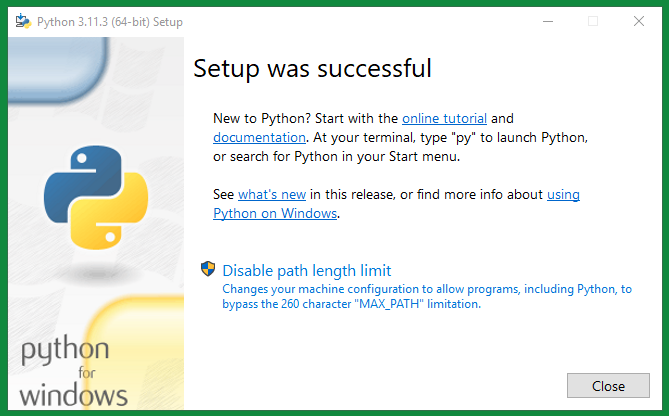

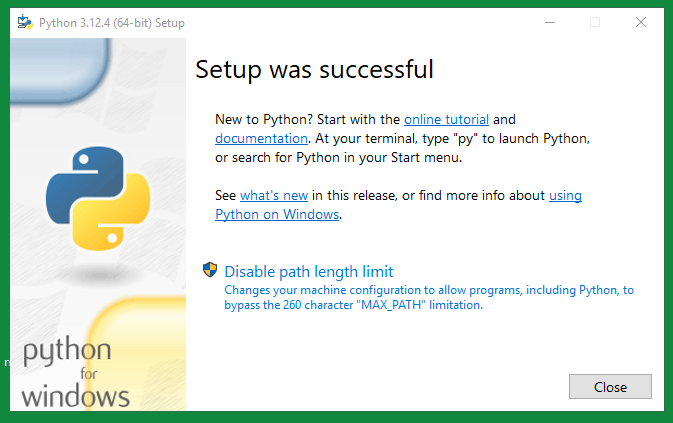

Click Close when done.

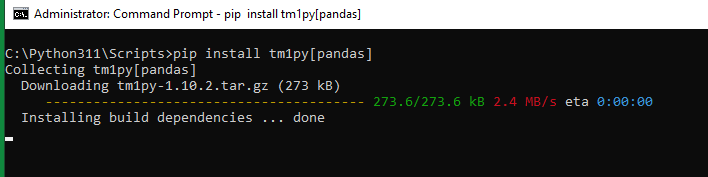

On your laptop (which is connected to the internet), go to your Python folder. In the command prompt, enter:

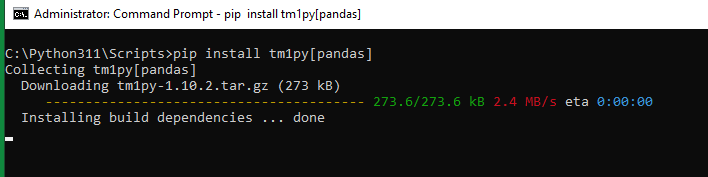

pip install tm1py[pandas]

When done, run the command below to make sure you have the requirements (see https://github.com/cubewise-code/tm1py):

pip install requests_negotiate_sspi

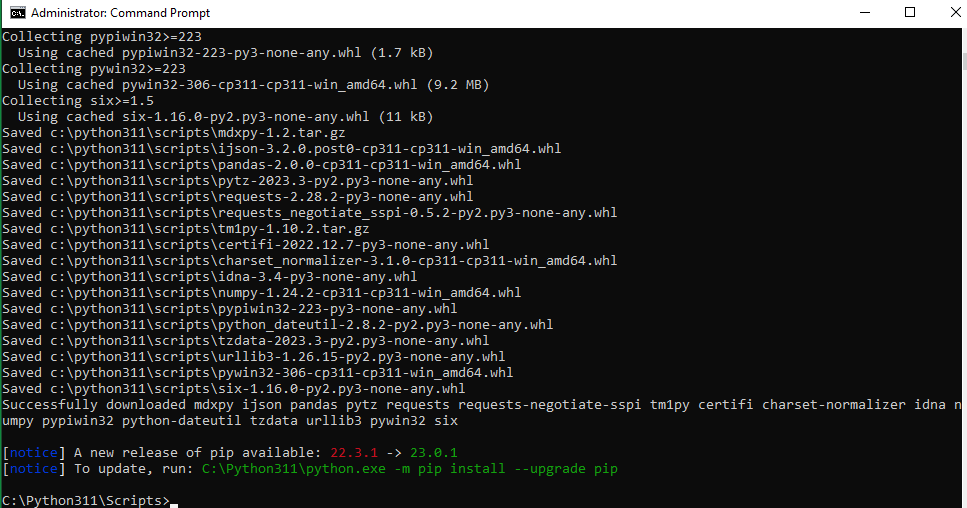

Create the folder c:\temp\offline. Change to that folder in the command window and run:

pip freeze > C:/temp/offline/requirements.txt

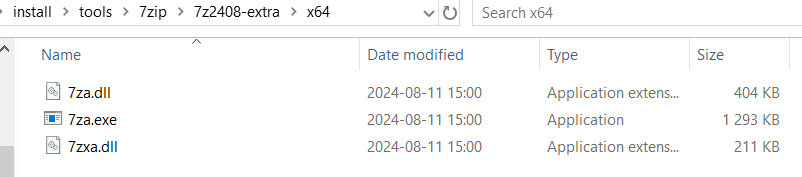

Download all the needed package files with this command (run it from the folder where you want the files to be saved):

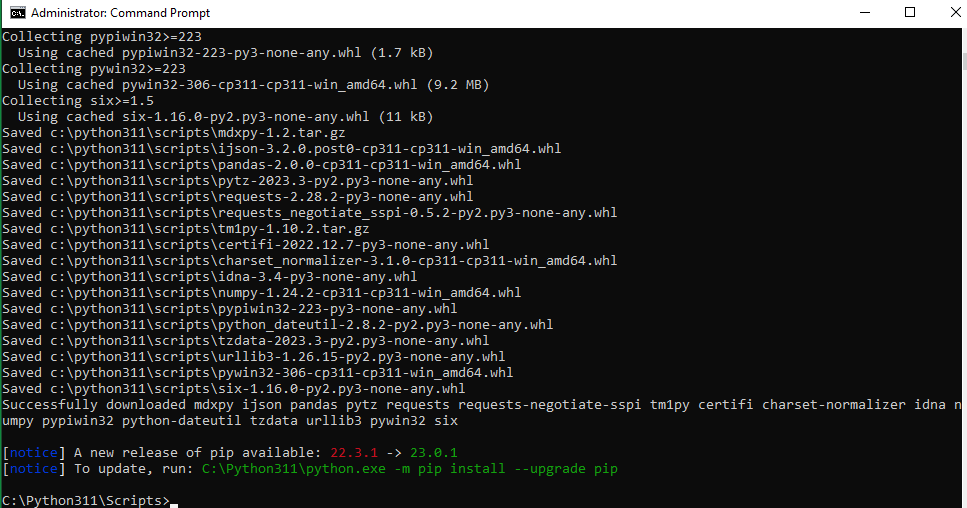

pip download tm1py[pandas]

The versions listed above depend on the versions of Python and tm1py you have installed. You may get different versions.

If you get errors later, it is in most cases because the right files did not end up in this folder.
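One way to avoid ending up with source archives (.tar.gz) in the folder is to let pip build wheels for everything on the laptop. The sketch below only builds the command line and assumes `pip wheel` is used instead of `pip download`, which is not what the steps in this post do. The function name and the paths are made up for the example.

```python
import sys

def build_wheel_command(requirements, dest):
    """Build a 'pip wheel' call that puts a .whl for every requirement in dest.

    Assumption: 'pip wheel' replaces 'pip download' here, so packages that
    only ship a .tar.gz are built into wheels on the online laptop.
    """
    return [
        sys.executable, "-m", "pip", "wheel",
        "-r", str(requirements),   # the file written by 'pip freeze'
        "-w", str(dest),           # folder that is later copied to the server
    ]

cmd = build_wheel_command(r"C:\temp\offline\requirements.txt", r"C:\temp\offline")
print(" ".join(cmd))
# To actually run it on the laptop: subprocess.run(cmd, check=True)
```

Run it with the same Python version as on the server, since some wheels are built for one specific Python version.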

Now copy the files from the laptop to the server (copy all files to the same folder).

certifi==2022.12.7

charset-normalizer==3.1.0

idna==3.4

ijson==3.2.0.post0

mdxpy==1.2

numpy==1.24.2

pandas==2.0.0

pypiwin32==223

python-dateutil==2.8.2

pytz==2023.3

pywin32==306

requests==2.28.2

requests-negotiate-sspi==0.5.2

six==1.16.0

TM1py==1.10.2

tzdata==2023.3

urllib3==1.26.15

Above is an example of the content of the requirements file (requirements.txt or req.txt) for the files tm1py needs.
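If you want to check the file with a script, the pinned lines are easy to read. This is a minimal sketch, assuming the file only has lines of the form name==version as in the example above. `parse_pins` is a made-up helper name.

```python
def parse_pins(text):
    """Return {package_name: version} for every 'name==version' line."""
    pins = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()   # drop comments and whitespace
        if not line or "==" not in line:
            continue                           # skip blank or unpinned lines
        name, version = line.split("==", 1)
        pins[name.strip()] = version.strip()
    return pins

sample = """
certifi==2022.12.7
TM1py==1.10.2
urllib3==1.26.15
"""
pins = parse_pins(sample)
print(pins["TM1py"])  # prints 1.10.2
```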

Copy the whl files in c:\python311\scripts over to the server's c:\python311\scripts folder (or to the folder you are using, such as c:\temp\offline).

If you copy between virtual machines in VirtualBox, you can access the host's drive with \\Vboxsvr\c_drive\temp.

How to create shared folder for virtual machine on VirtualBox

It is important that the files are in the same folder, otherwise you get:

C:\Python311\Scripts>C:\Python311\scripts\pip install --no-index --find-links C:/temp/offline -r requirements.txt

ERROR: Could not open requirements file: [Errno 2] No such file or directory: 'requirements.txt'

C:\temp\offline>C:\Python311\scripts\pip install --no-index --find-links C:/temp/offline -r requirements.txt

Looking in links: c:/temp/offline

ERROR: Could not find a version that satisfies the requirement certifi==2022.12.7 (from versions: none)

ERROR: No matching distribution found for certifi==2022.12.7

C:\Python311\Scripts>C:\Python311\scripts\pip install --no-index --find-links -r requirements.txt

Looking in links: -r

WARNING: Location '-r' is ignored: it is either a non-existing path or lacks a specific scheme.

ERROR: Could not find a version that satisfies the requirement requirements.txt (from versions: none)

HINT: You are attempting to install a package literally named "requirements.txt" (which cannot exist). Consider using the '-r' flag to install the packages listed in requirements.txt

ERROR: No matching distribution found for requirements.txt

C:\Python311\Scripts>C:\Python311\scripts\pip install --no-index --find-links c:\python311\scripts -r requirements.txt

Looking in links: c:\python311\scripts

Processing c:\python311\scripts\certifi-2022.12.7-py3-none-any.whl

Processing c:\python311\scripts\charset_normalizer-3.1.0-cp311-cp311-win_amd64.whl

Processing c:\python311\scripts\idna-3.4-py3-none-any.whl

Processing c:\python311\scripts\ijson-3.2.0.post0-cp311-cp311-win_amd64.whl

Processing c:\python311\scripts\mdxpy-1.2.tar.gz

Installing build dependencies ... error

error: subprocess-exited-with-error

× pip subprocess to install build dependencies did not run successfully.

│ exit code: 1

╰─> [3 lines of output]

Looking in links: c:\python311\scripts

ERROR: Could not find a version that satisfies the requirement setuptools (from versions: none)

ERROR: No matching distribution found for setuptools

[end of output]

note: This error originates from a subprocess, and is likely not a problem with pip.

error: subprocess-exited-with-error

× pip subprocess to install build dependencies did not run successfully.

│ exit code: 1

╰─> See above for output.

note: This error originates from a subprocess, and is likely not a problem with pip.

Add -vvv at the end of the command to get more information about the issue:

pip install --no-index --find-links c:\python311\scripts -r requirements.txt -vvv

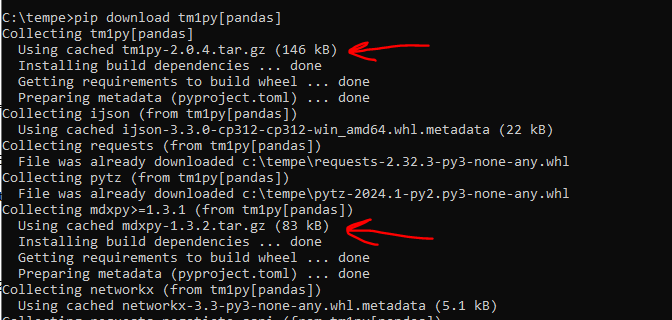

A possible solution: check which files cause the problem. If they are the files that end with tar.gz, search the laptop where tm1py is installed for the matching whl file. For example, search for mdxpy* if you get an error at mdxpy-1.3.2.tar.gz.

You will find the file in a folder like C:\Users\Administrator\AppData\Local\pip\cache\wheels\f8\6d\90\4bfbb1a9cc872dbc6e440d1f4ec64cb896cc724e3a9d29fc92

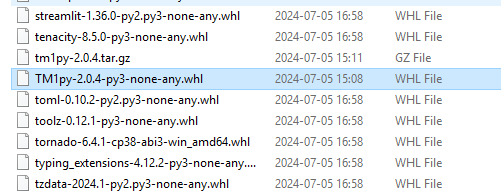

Copy the file mdxpy-1.3.2-py3-none-any.whl to the folder where you have the other files you need (such as c:\temp\offline).

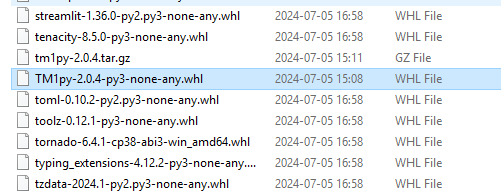

Repeat for the file TM1py-2.0.4-py3-none-any.whl: copy the whl file from the cache to the other computer and place it in the folder you install from.
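Searching the cache by hand gets tedious when several packages are affected. Below is a small sketch that walks a cache folder and lists the whl files for one package. The helper name is made up, and the cache location in the usage line is only an assumption based on the path shown above (the command `pip cache dir` prints the real location on your machine).

```python
from pathlib import Path

def find_cached_wheels(cache_dir, package):
    """Return every .whl under cache_dir whose file name starts with package."""
    root = Path(cache_dir)
    if not root.is_dir():
        return []                       # nothing to search
    prefix = package.lower()
    return sorted(
        p for p in root.rglob("*.whl")  # search all sub-folders
        if p.name.lower().startswith(prefix)
    )

# Assumed default cache location on Windows; adjust to your own machine.
cache = Path.home() / "AppData" / "Local" / "pip" / "cache"
for wheel in find_cached_wheels(cache, "mdxpy"):
    print(wheel)
```

You can then copy the listed files to the folder you install from, for example with shutil.copy.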

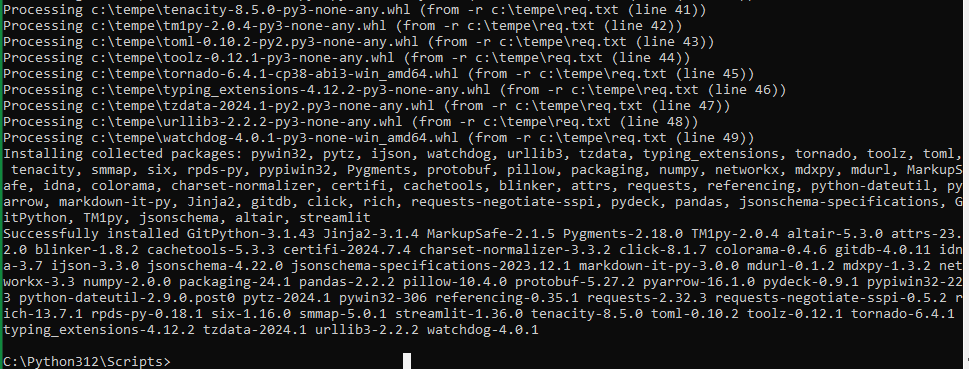

Then try again with a command similar to this:

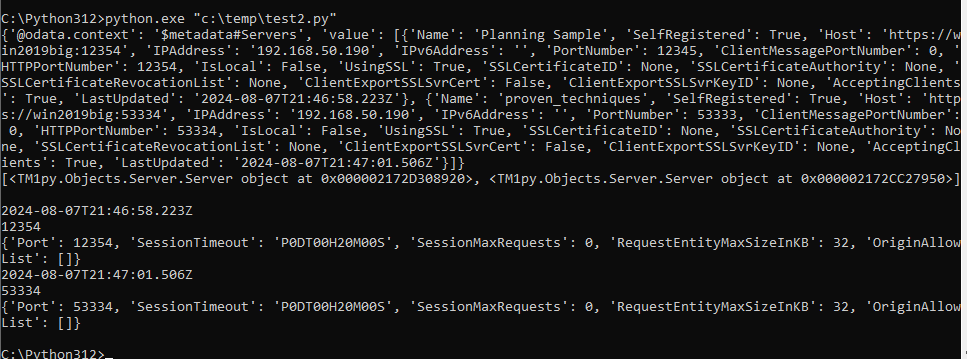

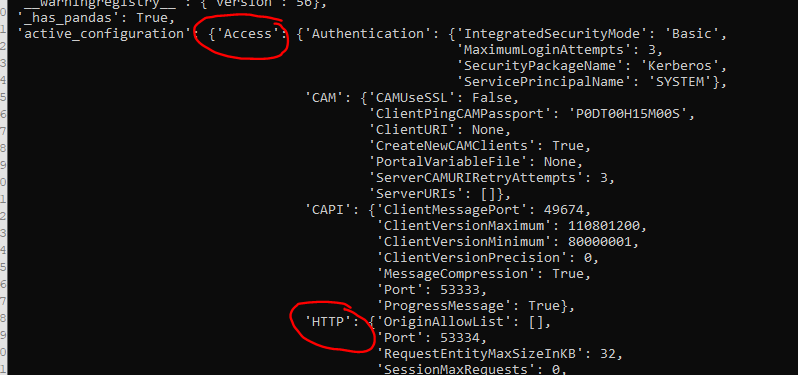

c:\python312\scripts\pip install --no-index --find-links C:\tempd -r c:\tempd\req.txt

The key is to have all the whl files you need in the folder you install from.
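Before you run pip on the server, you can check that every pinned package has a whl file in the folder. The following is only a sketch: the function names are invented for this example, and it assumes the standard wheel file naming (name-version-pythontag-abitag-platform.whl).

```python
import re

def normalize(name):
    """Normalise a project name so that '-', '_' and '.' compare equal."""
    return re.sub(r"[-_.]+", "-", name).lower()

def missing_wheels(pins, filenames):
    """Return the 'name==version' pins that have no matching .whl file."""
    available = set()
    for filename in filenames:
        if not filename.endswith(".whl"):
            continue                       # .tar.gz archives do not count
        parts = filename[:-4].split("-")   # name, version, then the tags
        if len(parts) >= 5:
            available.add((normalize(parts[0]), parts[1]))
    return [
        f"{name}=={version}"
        for name, version in pins.items()
        if (normalize(name), version) not in available
    ]

pins = {"certifi": "2022.12.7", "charset-normalizer": "3.1.0", "mdxpy": "1.2"}
files = [
    "certifi-2022.12.7-py3-none-any.whl",
    "charset_normalizer-3.1.0-cp311-cp311-win_amd64.whl",
    "mdxpy-1.2.tar.gz",
]
print(missing_wheels(pins, files))  # prints ['mdxpy==1.2']
```

On a real folder you would pass os.listdir(r"C:\temp\offline") as the second argument.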

If you get:

ERROR: Could not open requirements file: [Errno 2] No such file or directory: 'requirements.txt'

try this command after you copy the txt file to the Scripts folder:

C:\Python311\Scripts>pip install --no-index --find-links c:\python311\scripts -r requirements.txt -vvv

You can try editing the txt file so that it lists the version number shown in the error. That makes the error go away, but you then get a new error for the next package:

C:\tempas>pip install --no-index --find-links c:\tempas\ -r requirements.txt

Looking in links: c:\tempas\

Processing c:\tempas\certifi-2023.11.17-py3-none-any.whl

Processing c:\tempas\charset_normalizer-3.3.2-cp311-cp311-win_amd64.whl

Processing c:\tempas\idna-3.6-py3-none-any.whl

ERROR: Could not find a version that satisfies the requirement ijson==3.2.0.post0 (from versions: 3.2.3)

ERROR: No matching distribution found for ijson==3.2.0.post0
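Instead of correcting one version number at a time, you could write the pins from the whl files that are actually in the folder. This is a sketch only, with a made-up function name. Note the assumption: it installs whatever versions were downloaded, which may differ from what pip freeze listed on the laptop.

```python
def pins_from_wheels(filenames):
    """Build 'name==version' lines from the .whl file names in a folder."""
    lines = []
    for filename in sorted(filenames, key=str.lower):
        if not filename.endswith(".whl"):
            continue                       # ignore .tar.gz and other files
        parts = filename[:-4].split("-")   # name, version, then the tags
        if len(parts) >= 5:
            lines.append(f"{parts[0]}=={parts[1]}")
    return lines

files = [
    "idna-3.6-py3-none-any.whl",
    "ijson-3.2.3-cp311-cp311-win_amd64.whl",
    "certifi-2023.11.17-py3-none-any.whl",
]
print("\n".join(pins_from_wheels(files)))
```

Save the printed lines as the requirements file in the same folder as the whl files.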

When you install several times on the same computer, keep in mind that pip may load files from its local cache folder first, and therefore the installation can fail even if you write the correct command.

== The last solution to try is below ==

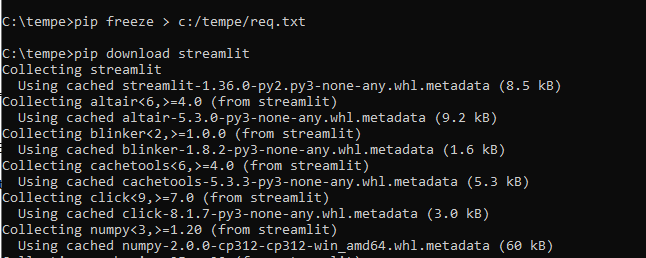

To recap, these are the steps to install a Python package on an air-gapped computer:

On the computer that has internet access:

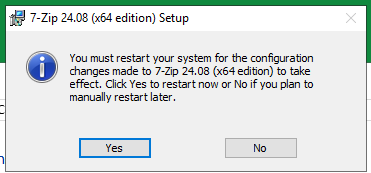

Install the latest Python package (python-3.12.4-amd64.exe), and install the same package on the air-gapped computer.

During installation, make sure you select install for all users and add to PATH. Then go to the command prompt and run:

pip install tm1py[pandas] streamlit

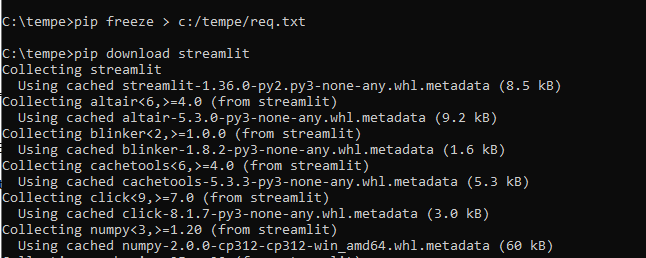

pip freeze > c:/tempe/req.txt

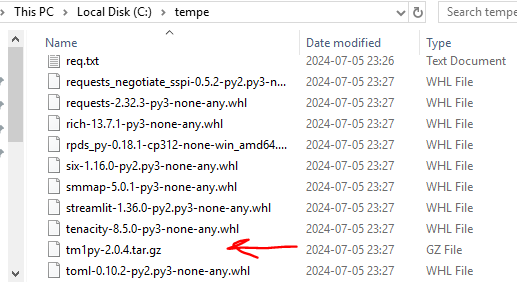

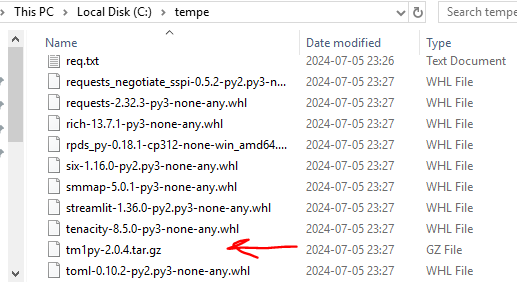

Go to the tempe folder and run the commands below:

pip download streamlit

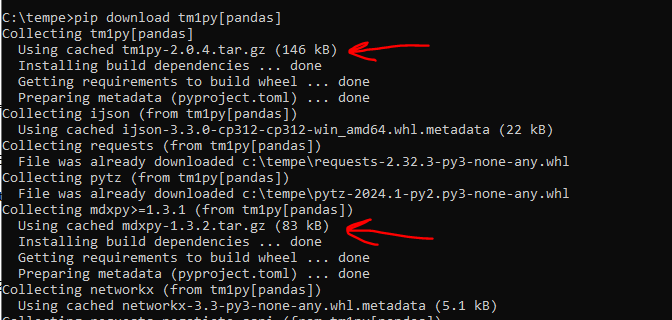

pip download tm1py[pandas]

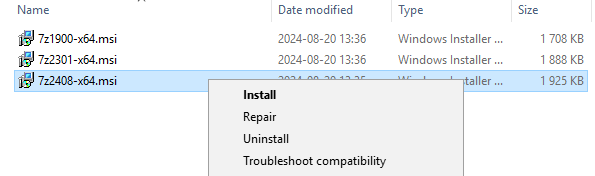

Here you get the gz file, but it looks like you need the whl file. Copy it from the cache to the c:\tempe folder. You may need these files:

mdxpy-1.3.2-py3-none-any.whl

TM1py-2.0.4-py3-none-any.whl

ijson-3.3.0-cp312-cp312-win_amd64.whl

Now you have all the needed whl files in the folder. The version numbers can differ if you install a different version of tm1py.

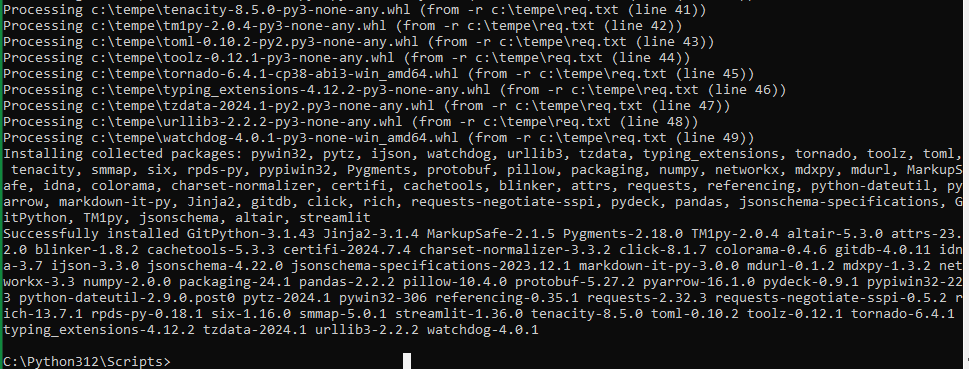

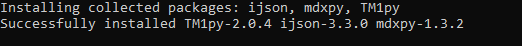

Copy the \tempe\ folder over to the air-gapped computer and run the command:

c:\python312\scripts\pip install --no-index --find-links C:\tempe -r c:\tempe\req.txt
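Most of the errors earlier in this post came from a wrong path to the requirements file or to the folder with the whl files. The sketch below checks both before it builds the same pip command. The function name is invented, and the paths are the ones used above.

```python
import sys
from pathlib import Path

def build_offline_install(folder, requirements):
    """Check the inputs, then build the offline 'pip install' command."""
    folder, requirements = Path(folder), Path(requirements)
    if not requirements.is_file():
        raise FileNotFoundError(f"requirements file not found: {requirements}")
    if not folder.is_dir() or not any(folder.glob("*.whl")):
        raise FileNotFoundError(f"no .whl files found in: {folder}")
    return [
        sys.executable, "-m", "pip", "install",
        "--no-index",                  # never contact the internet
        "--find-links", str(folder),   # look for packages in this folder only
        "-r", str(requirements),
    ]

try:
    cmd = build_offline_install(r"C:\tempe", r"C:\tempe\req.txt")
    print(" ".join(cmd))
    # To run the installation: subprocess.run(cmd, check=True)
except FileNotFoundError as err:
    print(err)
```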

Hopefully this makes the installation easier on your computer. (Below is the output when the pip command works.)

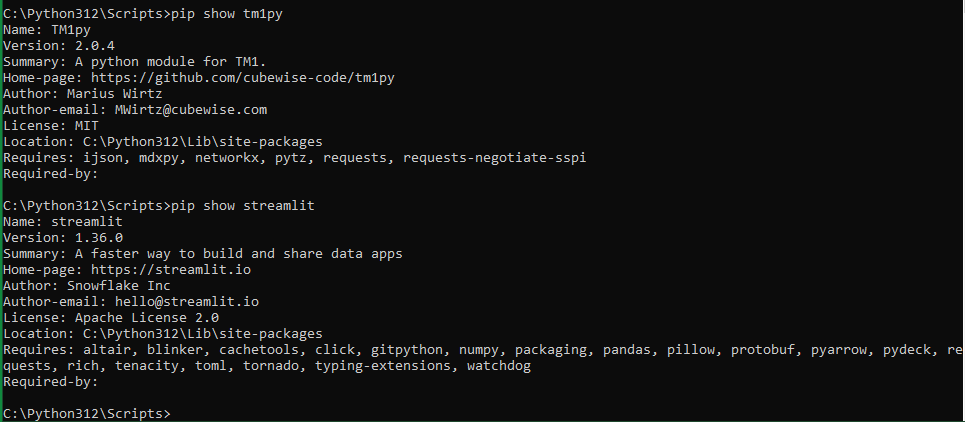

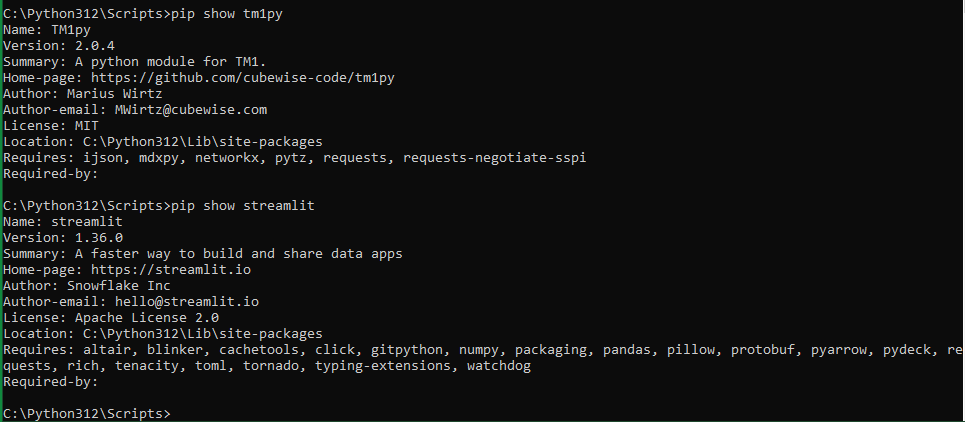

To check if tm1py is installed, enter: pip show tm1py

Name: TM1py

Version: 1.10.2

Summary: A python module for TM1.

Home-page: https://github.com/cubewise-code/tm1py

Author: Marius Wirtz

Author-email: MWirtz@cubewise.com

License: MIT

Location: C:\Python311\Lib\site-packages

Requires: ijson, mdxpy, pytz, requests, requests-negotiate-sspi

Required-by:
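The same check can be done from Python, which is handy inside a script. A minimal sketch using the standard library module importlib.metadata (Python 3.8 or later); `installed_version` is a made-up helper name.

```python
from importlib import metadata

def installed_version(package):
    """Return the installed version of package, or None if it is missing."""
    try:
        return metadata.version(package)
    except metadata.PackageNotFoundError:
        return None

version = installed_version("TM1py")
if version is None:
    print("TM1py is not installed")
else:
    print("TM1py version", version)
```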

What the installation steps look like with the latest Python installer (https://www.python.org/downloads/):

More information:

Share Folders between Host and Guest OS in VirtualBox

https://bobbyhadz.com/blog/python-could-not-find-a-version-that-satisfies-the-requirement

https://bobbyhadz.com/blog/python-the-script-is-installed-in-which-is-not-on-path

Add Python to PATH by using the keyboard shortcut Win+Pause to get to Settings; under the advanced settings link, click the “Environment Variables” button.

https://www.activestate.com/resources/quick-reads/how-to-download-python-dependencies/

https://learn.microsoft.com/en-us/azure-data-studio/notebooks/notebooks-python-offline-installation

http://srikanthtechnologies.com/blog/python/installing_libraries_offline.aspx

https://www.python.org/downloads/release/python-350/

https://cubewise-code.github.io/tm1py-tales/2022/tm1py-web-app.html

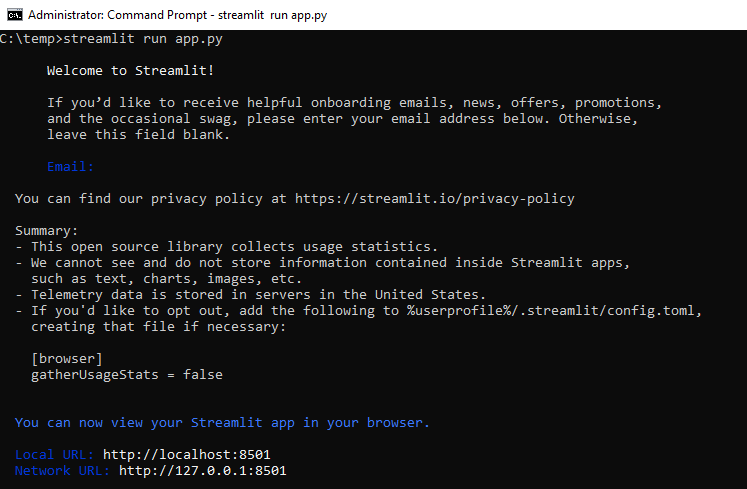

You can install streamlit via pip:

pip install streamlit

Once streamlit is installed, let us create a very basic web app.

As the first step, let us create a new Python script. Let us call it app.py.

Open app.py in your favourite IDE or text editor, then add these lines:

import streamlit as st

st.title("I love TM1py")

With that in place, we can run Streamlit from the command line:

streamlit run app.py

https://en.wikipedia.org/wiki/Air_gap_(networking)

https://www.learnpython.org/en/Dictionaries