Product:

Microsoft Azure SQL Database

Issue:

When running a stored procedure (SP), you get the following error after a long time:

SQL error number: 40544. Error message: The database 'tempdb' has reached its size quota.

Suggested solution:

If possible, increase your DTU level. This resets tempdb and frees its space, so you can start over.

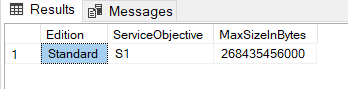

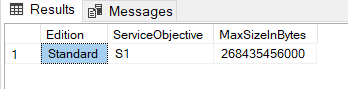
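The scale-up suggested above can also be done from T-SQL instead of the portal. The following is a minimal sketch, not a definitive procedure: `databasename` is a placeholder, and `Standard` / `S3` are only example targets chosen because S3 is the first tier in the tempdb table with a larger limit. It is typically run while connected to the `master` database of the logical server.

```sql
-- Assumption: [databasename] is a placeholder; 'Standard' and 'S3' are example targets.
-- Scaling is asynchronous, so the statement returns before the change is finished.
ALTER DATABASE [databasename]
MODIFY (EDITION = 'Standard', SERVICE_OBJECTIVE = 'S3');

-- Optional: follow the progress of the scaling operation (run in master).
SELECT operation,
       state_desc,
       percent_complete,
       start_time
FROM sys.dm_operation_status
WHERE major_resource_id = 'databasename'
ORDER BY start_time DESC;
```

Existing connections may be dropped when the change completes, so it is safer to scale when no long-running job is active.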

In SSMS, check which Azure SQL service tier you are on:

    SELECT Edition = DATABASEPROPERTYEX('databasename', 'EDITION'),
           ServiceObjective = DATABASEPROPERTYEX('databasename', 'ServiceObjective'),
           MaxSizeInBytes = DATABASEPROPERTYEX('databasename', 'MaxSizeInBytes');

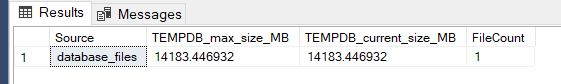

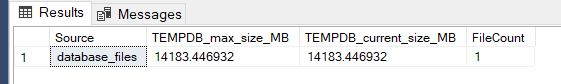

In SSMS, run the following to see the current file size of tempdb:

    -- size and max_size are counted in 8 KB pages: pages * 8 = KB, KB / 1024 = MB
    SELECT [Source] = 'database_files',
           [TEMPDB_max_size_MB] = SUM(max_size) * 8 / 1024.0,
           [TEMPDB_current_size_MB] = SUM(size) * 8 / 1024.0,
           [FileCount] = COUNT(FILE_ID)
    FROM tempdb.sys.database_files
    WHERE type = 0; -- ROWS

In the result above, we have used up the 13.9 GB tempdb file size limit that applies to the lower tiers. The following query shows how the space inside tempdb is used:

    -- Page counts are 8 KB pages, so 128 pages = 1 MB
    SELECT (SUM(unallocated_extent_page_count) * 1.0 / 128) AS [Free space(MB)],
           (SUM(version_store_reserved_page_count) * 1.0 / 128) AS [Used Space by VersionStore(MB)],
           (SUM(internal_object_reserved_page_count) * 1.0 / 128) AS [Used Space by InternalObjects(MB)],
           (SUM(user_object_reserved_page_count) * 1.0 / 128) AS [Used Space by UserObjects(MB)]
    FROM tempdb.sys.dm_db_file_space_usage;

| Service-level objective | Maximum tempdb data file size (GB) | Number of tempdb data files | Maximum tempdb data size (GB) |
| --- | --- | --- | --- |
| Basic | 13.9 | 1 | 13.9 |
| S0 | 13.9 | 1 | 13.9 |
| S1 | 13.9 | 1 | 13.9 |
| S2 | 13.9 | 1 | 13.9 |
| S3 | 32 | 1 | 32 |

The recommended solution is to find out what is causing the heavy use of tempdb space by checking your query plans in SSMS.

Then tighten your table column definitions to only what you need. For example, use nvarchar(50) instead of nvarchar(max).
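As an illustration of narrowing a column, here is a minimal sketch. The table `dbo.Customers` and the column `CustomerName` are hypothetical names used only for this example, and the nullability shown is an assumption that should match your existing column definition.

```sql
-- Hypothetical table and column names, for illustration only.

-- 1) Check the longest value actually stored before shrinking the type.
SELECT MAX(LEN(CustomerName)) AS MaxLengthInUse
FROM dbo.Customers;

-- 2) If every value fits in 50 characters, narrow the column.
--    The statement fails instead of truncating if a longer value exists.
ALTER TABLE dbo.Customers
ALTER COLUMN CustomerName nvarchar(50) NULL;
```

Smaller declared sizes tend to lead to smaller memory grant estimates for sorts and hashes, which makes spills into tempdb less likely.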

Check the queries in your stored procedures, and add an index on the columns that you think will narrow the selection fastest.
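A minimal sketch of such an index follows. The table `dbo.Orders`, its columns, and the index name are all hypothetical; which columns belong in the key and which in `INCLUDE` depends on the filters and output columns of your own query plans.

```sql
-- Hypothetical table, columns, and index name, for illustration only.
-- Key columns: the ones used in WHERE / JOIN predicates.
-- INCLUDE columns: extra columns the query returns, to avoid key lookups.
CREATE NONCLUSTERED INDEX IX_Orders_CustomerId_OrderDate
ON dbo.Orders (CustomerId, OrderDate)
INCLUDE (TotalAmount);
```

After creating the index, compare the query plan again to confirm that large sort or hash operators have shrunk or disappeared.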

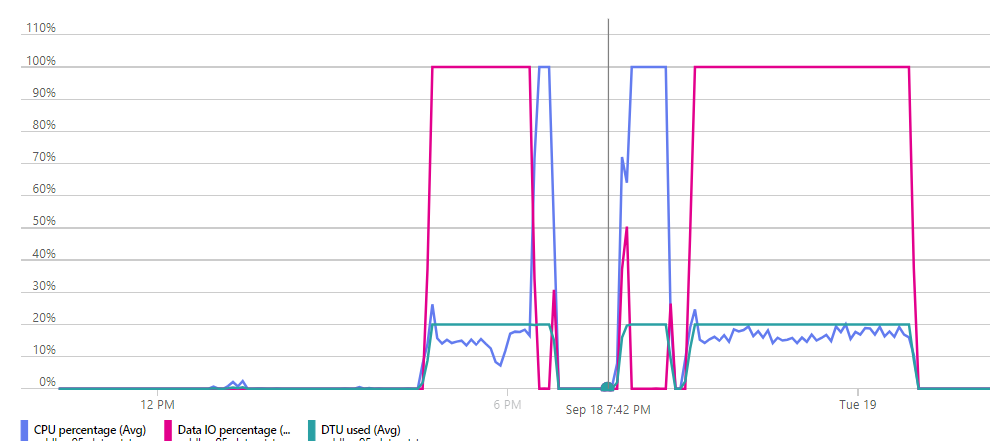

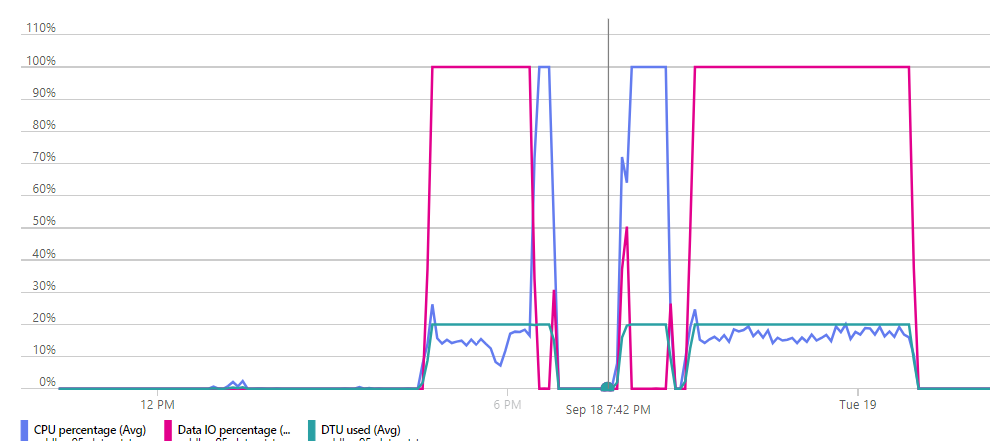

A DTU in Azure is a combination of CPU usage per second and read/write I/O per second to disk. When you have used up your quota, the number of bytes you can write to disk per second is limited, so your process will still succeed, but it will take much longer because only a small amount of data is processed each second.

A database transaction unit (DTU) represents a blended measure of CPU, memory, reads, and writes. Service tiers in the DTU-based purchasing model are differentiated by a range of compute sizes with a fixed amount of included storage, fixed retention period for backups, and fixed price.
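To see how close a database is to its DTU limits, the recent resource history can be queried from within the database itself. This is a small sketch using the `sys.dm_db_resource_stats` view; the choice of 20 rows is arbitrary.

```sql
-- Each row covers a short interval (about 15 seconds); values are percentages
-- of the limit for the current service objective.
SELECT TOP (20)
       end_time,
       avg_cpu_percent,
       avg_data_io_percent,
       avg_log_write_percent,
       avg_memory_usage_percent
FROM sys.dm_db_resource_stats
ORDER BY end_time DESC;
```

Values that stay near 100 in any column suggest that the workload is being throttled on that resource.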

https://blog.atwork.at/post/Azure-Subscription-and-Service-Limits

https://www.spotlightcloud.io/blog/what-is-dtu-in-azure-sql-database-and-how-much-do-we-need

More information:

https://knowledge-base.havit.eu/2018/02/19/azure-sql-the-database-tempdb-has-reached-its-size-quota-partition-or-delete-data-drop-indexes-or-consult-the-documentation-for-possible-resolutions-microsoft-sql-server-error-40544/

https://techcommunity.microsoft.com/t5/azure-database-support-blog/resolve-tempdb-related-errors-in-azure-sql-database/ba-p/3597944

https://sqlcoffee.com/Azure_0013.htm

https://www.brentozar.com/archive/2018/02/memory-grants-sql-servers-public-toilet/

Top five considerations for SQL Server index design

https://learn.microsoft.com/en-us/azure/azure-sql/database/resource-limits-dtu-single-databases?view=azuresql#tempdb-sizes

https://www.brentozar.com/archive/2023/09/oops-i-lost-my-indexes-in-azure-sql-db/

https://www.sqlshack.com/sql-index-overview-and-strategy/

https://learn.microsoft.com/en-us/sql/relational-databases/sql-server-index-design-guide?view=sql-server-ver16

https://datasimantics.com/2018/08/24/sql-servers-nvarcharmax-and-how-to-wield-it/

https://www.ibm.com/support/pages/only-first-1024-characters-nvarcharmax-column-are-presented-report-based-dqm-package

https://learn.microsoft.com/en-us/azure/azure-sql/database/service-tiers-dtu?view=azuresql

https://learn.microsoft.com/en-us/azure/azure-sql/database/resource-limits-dtu-single-databases?view=azuresql

To see sessions that use TEMPDB:

    -- Sessions with open transactions in tempdb
    SELECT [Source] = 'database_transactions',
           [session_id] = ST.session_id,
           [transaction_id] = ST.transaction_id,
           [login_name] = S.login_name,
           [session_database_id] = S.database_id, -- renamed to avoid two columns called database_id
           [program_name] = S.program_name,
           [host_name] = S.host_name,
           [database_id] = DT.database_id,
           [database_name] = CASE
                                 WHEN D.name IS NULL AND DT.database_id = 2 THEN 'TEMPDB'
                                 ELSE D.name
                             END,
           [log_reuse_wait_desc] = D.log_reuse_wait_desc,
           [database_transaction_log_used_Kb] = CONVERT(numeric(18,2), DT.database_transaction_log_bytes_used / 1024.0),
           [database_transaction_begin_time] = DT.database_transaction_begin_time,
           [transaction_type_desc] = CASE DT.database_transaction_type
                                         WHEN 1 THEN 'Read/write transaction'
                                         WHEN 2 THEN 'Read-only transaction'
                                         WHEN 3 THEN 'System transaction'
                                         WHEN 4 THEN 'Distributed transaction'
                                     END,
           [transaction_state_desc] = CASE DT.database_transaction_state
                                          WHEN 1 THEN 'The transaction has not been initialized.'
                                          WHEN 2 THEN 'The transaction is active'
                                          WHEN 3 THEN 'The transaction has been initialized but has not generated any log records.'
                                          WHEN 4 THEN 'The transaction has generated log records.'
                                          WHEN 5 THEN 'The transaction has been prepared.'
                                          WHEN 10 THEN 'The transaction has been committed.'
                                          WHEN 11 THEN 'The transaction has been rolled back.'
                                          WHEN 12 THEN 'The transaction is being committed. (The log record is being generated, but has not been materialized or persisted.)'
                                      END,
           [active_transaction_type_desc] = CASE AT.transaction_type
                                                WHEN 1 THEN 'Read/write transaction'
                                                WHEN 2 THEN 'Read-only transaction'
                                                WHEN 3 THEN 'System transaction'
                                                WHEN 4 THEN 'Distributed transaction'
                                            END,
           [active_transaction_state_desc] = CASE AT.transaction_state
                                                 WHEN 0 THEN 'The transaction has not been completely initialized yet.'
                                                 WHEN 1 THEN 'The transaction has been initialized but has not started.'
                                                 WHEN 2 THEN 'The transaction is active'
                                                 WHEN 3 THEN 'The transaction has ended. This is used for read-only transactions.'
                                                 WHEN 4 THEN 'The commit process has been initiated on the distributed transaction.'
                                                 WHEN 5 THEN 'The transaction is in a prepared state and waiting resolution.'
                                                 WHEN 6 THEN 'The transaction has been committed.'
                                                 WHEN 7 THEN 'The transaction is being rolled back.'
                                                 WHEN 8 THEN 'The transaction has been rolled back.'
                                             END
    FROM sys.dm_tran_database_transactions DT
    INNER JOIN sys.dm_tran_session_transactions ST ON DT.transaction_id = ST.transaction_id
    INNER JOIN sys.dm_tran_active_transactions AT ON DT.transaction_id = AT.transaction_id
    INNER JOIN sys.dm_exec_sessions S ON ST.session_id = S.session_id
    LEFT JOIN sys.databases D ON DT.database_id = D.database_id
    WHERE DT.database_id = 2 -- tempdb
    ORDER BY ST.session_id, DT.database_id;
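The query above lists sessions with open transactions in tempdb, but not how much space each session holds. As a complementary sketch, the `sys.dm_db_session_space_usage` view can rank sessions by the tempdb pages they have allocated. The `TOP (10)` cut-off and the column aliases are arbitrary choices for this example, and the view only counts tasks that have already completed, so a statement that is still running may not show up yet.

```sql
-- Sessions ranked by tempdb pages allocated (8 KB pages, 128 pages = 1 MB).
SELECT TOP (10)
       SU.session_id,
       S.login_name,
       S.program_name,
       [user_objects_MB] =
           (SU.user_objects_alloc_page_count - SU.user_objects_dealloc_page_count) / 128.0,
       [internal_objects_MB] =
           (SU.internal_objects_alloc_page_count - SU.internal_objects_dealloc_page_count) / 128.0
FROM tempdb.sys.dm_db_session_space_usage AS SU
INNER JOIN sys.dm_exec_sessions AS S ON SU.session_id = S.session_id
ORDER BY (SU.user_objects_alloc_page_count + SU.internal_objects_alloc_page_count) DESC;
```

User objects are temporary tables and table variables, while internal objects are work tables created for sorts, hashes, and spools, so the two columns hint at whether to look at the procedure's temp tables or at its query plans.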