Product:

Power BI portal service

Problem:

How to import a CSV file from a SharePoint site into a dataflow.

If you use the SharePoint connection, you may get an error like this:

an exception occurred: DataSource.Error: Microsoft.Mashup.Engine1.Library.Resources.HttpResource: Request failed:

OData Version: 3 and 4, Error: The remote server returned an error: (404) Not Found. (Not Found)

OData Version: 4, Error: The remote server returned an error: (404) Not Found. (Not Found)

Solution:

There are different solutions to this issue; the one that works for you can depend on how your company has set up its security.

Go to the Power BI portal: https://app.powerbi.com/home?experience=power-bi

Open the workspace where you have administrator rights.

Click NEW DATAFLOW

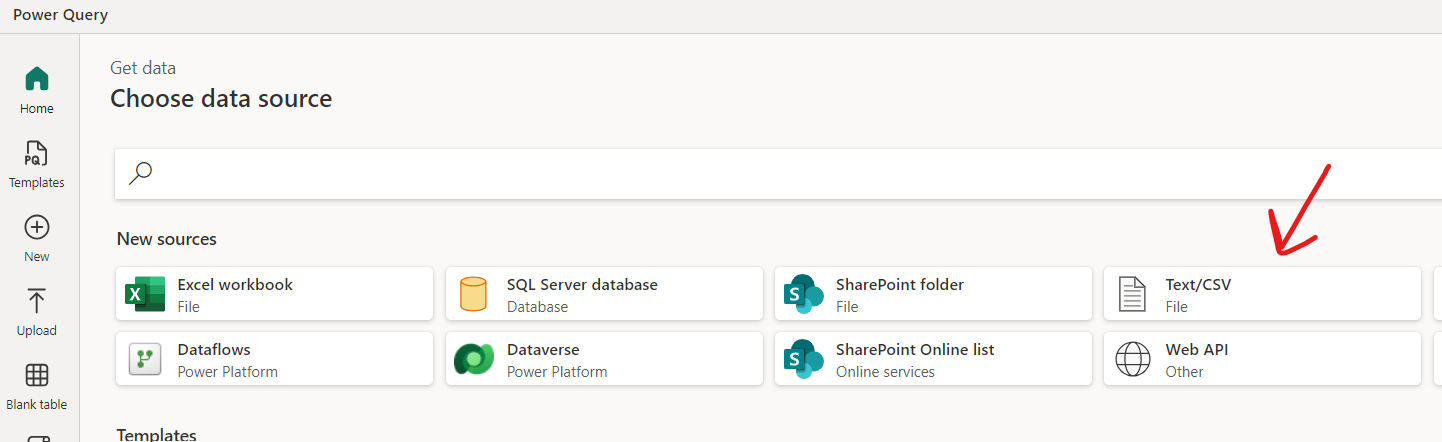

Click Add new tables

Select CSV file, not SharePoint file.

In the SharePoint folder where you have stored your file, copy the link to the file.

Then edit the link in Notepad: remove the :x:/r/ part that sits between .sharepoint.com/ and teams, and remove the ? together with everything after it.

You then get a “clean” URL that will work, like this (replace with your company information):

https://company.sharepoint.com/teams/powerbiworkspacename/foldername/General/enkel.csv
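If you need to clean many links, the manual edit above can be sketched in a few lines of Python. This is only an illustration, not part of the Power BI procedure: the function name `clean_sharepoint_link` and the query parameters in the sample link are hypothetical, and the pattern assumes the sharing marker always looks like `/:x:/r` (a single letter between the colons).

```python
import re
from urllib.parse import urlsplit, urlunsplit


def clean_sharepoint_link(link: str) -> str:
    """Turn a copied SharePoint sharing link into a direct file URL."""
    parts = urlsplit(link)
    # Assumption: the sharing marker is "/:<letter>:/r" at the start of the path,
    # for example "/:x:/r". Remove it so the path starts at "/teams".
    path = re.sub(r"^/:[a-z]:/r(?=/)", "", parts.path)
    # Rebuild the URL without the query string ("?..." part) and fragment.
    return urlunsplit((parts.scheme, parts.netloc, path, "", ""))


# Hypothetical copied link; the parameters after "?" are made up for illustration.
copied = (
    "https://company.sharepoint.com/:x:/r/teams/powerbiworkspacename/"
    "foldername/General/enkel.csv?d=w123&csf=1&web=1"
)
print(clean_sharepoint_link(copied))
```

For the sample link, the result has the same clean form as the URL shown above.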

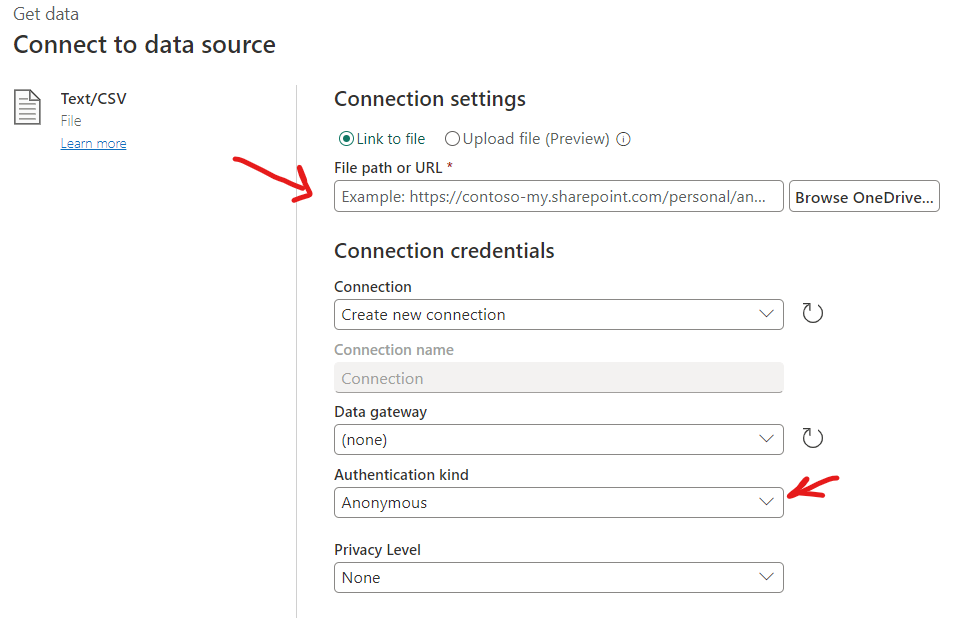

Paste the adjusted URL into the link to file field.

You may need to set the data gateway to “none”.

For the authentication kind, select “Organizational account”. If your SharePoint site belongs to your company and you are not already signed in to Azure in your web browser, you will be prompted to sign in with your company Azure account.

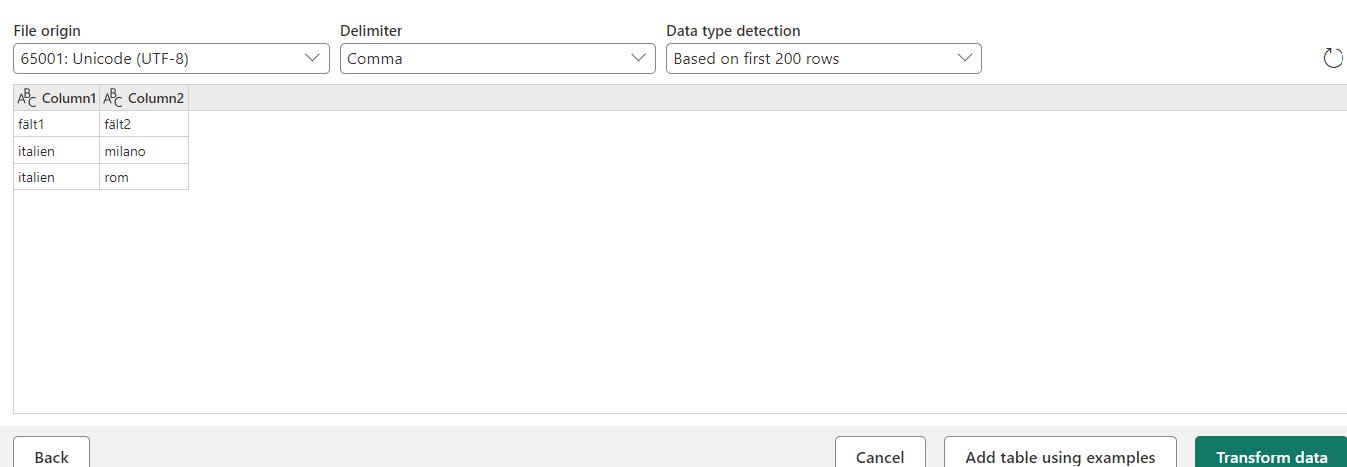

If all works, you get a preview of the file. Change the file origin so that it matches the file's encoding and special characters are handled correctly. In this case, Unicode (UTF-7) supported Swedish characters.

Click Transform data.
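Why the file origin matters can be shown with a small Python sketch. It is an illustration only and makes its own assumptions: the sample text is made up, and the file is assumed to be stored as UTF-8, which may not match your file. The same bytes read with the wrong encoding give garbled Swedish characters.

```python
# Hypothetical Swedish sample text standing in for the CSV content.
text = "räksmörgås"

# Assumption: the file was saved as UTF-8.
raw = text.encode("utf-8")

# Reading the bytes with the matching encoding restores the text.
right = raw.decode("utf-8")

# Reading the same bytes as Western European (Windows-1252) garbles å, ä and ö.
wrong = raw.decode("cp1252")

print(right)
print(wrong)
```

If the preview shows garbled characters like the second line, try another file origin until the characters look right.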

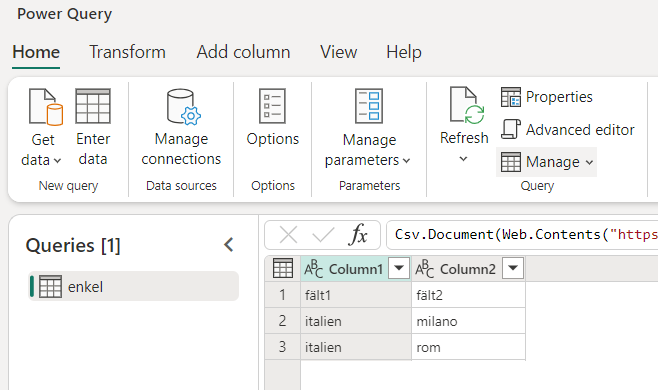

You now see an editor similar to the transform view in Power BI Desktop, where you can change the data before it is loaded into the cache.

The code is similar to below:

More Information:

https://www.linkedin.com/pulse/analytics-tips-connecting-data-from-sharepoint-folder-diane-zhu

https://learn.microsoft.com/en-us/power-query/connectors/sharepoint-folder

https://learn.microsoft.com/en-us/power-bi/transform-model/dataflows/dataflows-create

https://www.phdata.io/blog/how-and-when-to-use-dataflows-in-power-bi/

Dataflow Gen2 (Fabric) is an enhancement over the original Dataflow. One of the key improvements is the ability to separate your Extract, Transform, Load (ETL) logic from the destination storage, which provides more flexibility. Gen2 also comes with a more streamlined authoring experience and improved performance.

For a more detailed comparison, you can refer to this link:

Differences between Dataflow Gen1 and Dataflow Gen2 – Microsoft Fabric | Microsoft Learn

Datamart primarily uses dataflow technology to import data into an Azure SQL database. Datamart then automatically generates and links datasets. You can also create dataflows that connect to the Datamart; these can be used for DirectQuery or import if the enhanced compute engine is enabled.

For a more detailed comparison, you can refer to this link: