Product:

Planning Analytics 2.0.9.19

Microsoft Windows 2019 server

Issue:

I would like to turn off the DEV TM1 servers during the weekend. Can I do it with a chore?

Solution:

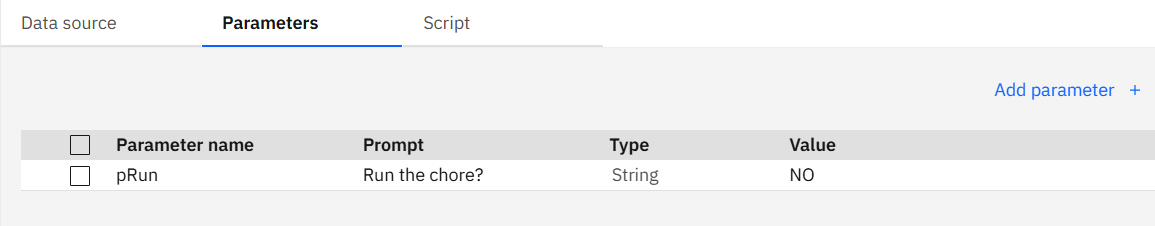

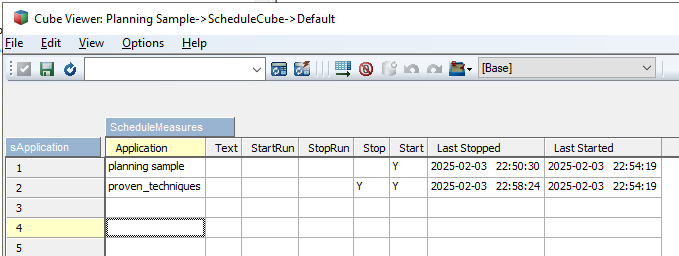

Create a parameter cube like the one shown here, and enter the TM1 instance name in the Application column. Enter Y in the Stop column if the chore should stop the service, and Y in the Start column if it should start it again. The last two columns record the time when the service was last stopped or started.

# -- setup the parameter cube --

Cube = 'ScheduleCube' ;

DimName1 = 'sApplication' ;

DimName2 = 'ScheduleMeasures' ;

sLoadtestdata = 'NO' ;

# sLoadtestdata = 'YES' ;

DimensionDestroy (DimName1 ) ;

DimensionCreate (DimName1 ) ;

# -- add elements --

nNum = 1;

WHILE ( nNum <= 15 );

sNum = numbertostring (nNum) ;

DimensionElementInsertDirect (DimName1 ,'', sNum , 'N' ) ;

nNum = nNum + 1;

END;

DimensionDestroy (DimName2);

DimensionCreate (DimName2 );

# -- add elements --

DimensionElementInsertDirect (DimName2 ,'', 'Application' , 'S' ) ;

DimensionElementInsertDirect (DimName2 ,'', 'Text' , 'S' ) ;

DimensionElementInsertDirect (DimName2 ,'', 'StartRun' , 'S' ) ;

DimensionElementInsertDirect (DimName2 ,'', 'StopRun' , 'S' ) ;

DimensionElementInsertDirect (DimName2 ,'', 'Stop' , 'S' ) ;

DimensionElementInsertDirect (DimName2 ,'', 'Start' , 'S' ) ;

DimensionElementInsertDirect (DimName2 ,'', 'Last Stopped' , 'S' ) ;

DimensionElementInsertDirect (DimName2 ,'', 'Last Started' , 'S' ) ;

# -- create a cube --

CubeCreate (Cube, DimName1 , DimName2 );

# -- add data to cube --

IF ( sLoadtestdata @= 'YES' ) ;

CELLPUTS ( 'planning sample' , Cube , '1' , 'Application' ) ;

CELLPUTS ( 'Y' , Cube , '1' , 'Start' ) ;

CELLPUTS ( 'Y' , Cube , '1' , 'Stop' ) ;

CELLPUTS ( 'proven_techniques' , Cube , '2' , 'Application' ) ;

# -- add your default test data here --

ENDIF;
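The setup script calls DimensionDestroy and CubeCreate unconditionally, so running it a second time may fail, because the dimensions are then in use by the cube and the cube already exists. A minimal sketch of a re-runnable variant, assuming the same object names and using the standard DimensionExists and CubeExists functions, could look like this:

```
# -- sketch: create the objects only when they are missing --
Cube = 'ScheduleCube' ;
DimName1 = 'sApplication' ;
DimName2 = 'ScheduleMeasures' ;

# -- row dimension: 15 numbered slots, one per TM1 instance --
IF ( DimensionExists ( DimName1 ) = 0 ) ;
  DimensionCreate ( DimName1 ) ;
  nNum = 1 ;
  WHILE ( nNum <= 15 ) ;
    DimensionElementInsertDirect ( DimName1, '', NumberToString ( nNum ), 'N' ) ;
    nNum = nNum + 1 ;
  END ;
ENDIF ;

# -- measure dimension: same string elements as in the script above --
IF ( DimensionExists ( DimName2 ) = 0 ) ;
  DimensionCreate ( DimName2 ) ;
  DimensionElementInsertDirect ( DimName2, '', 'Application', 'S' ) ;
  DimensionElementInsertDirect ( DimName2, '', 'Text', 'S' ) ;
  DimensionElementInsertDirect ( DimName2, '', 'StartRun', 'S' ) ;
  DimensionElementInsertDirect ( DimName2, '', 'StopRun', 'S' ) ;
  DimensionElementInsertDirect ( DimName2, '', 'Stop', 'S' ) ;
  DimensionElementInsertDirect ( DimName2, '', 'Start', 'S' ) ;
  DimensionElementInsertDirect ( DimName2, '', 'Last Stopped', 'S' ) ;
  DimensionElementInsertDirect ( DimName2, '', 'Last Started', 'S' ) ;
ENDIF ;

# -- the cube is created once; existing settings are left untouched --
IF ( CubeExists ( Cube ) = 0 ) ;
  CubeCreate ( Cube, DimName1, DimName2 ) ;
ENDIF ;
```

With these guards, the Y flags and timestamps already stored in the cube survive a second run of the setup process.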

Then create a TM1 TI process to stop the services:

# --- stop a service --

Cube = 'ScheduleCube' ;

DimName1 = 'sApplication' ;

DimName2 = 'ScheduleMeasures' ;

# -- check number of elements --

nLong = DIMSIZ (DimName1) ;

nNum = 1 ;

WHILE ( nNum <= nLong ) ;

sNum = numbertostring (nNum) ;

# -- get the application name --

sApp = CELLGETS ( cube , sNum, 'Application' ) ;

# -- check that it is not empty --

IF (LONG (sApp) <> 0 );

# -- check that it is supposed to be stopped --

IF ('Y' @= CELLGETS ( cube, sNum, 'Stop' ) ) ;

# -- get the time and put in the cube --

sTime = TIMST(now, '\Y-\m-\d \h:\i:\s');

CELLPUTS ( sTime, cube, sNum, 'Last Stopped' ) ;

# -- make the call to stop --

sBatchFile = 'NET STOP "' | sApp |'"' ;

ExecuteCommand('cmd /c ' | sBatchFile, 0);

ENDIF;

ENDIF;

nNum = nNum +1 ;

END;

Create a TM1 TI process to start services:

# -- start a service --

Cube = 'ScheduleCube' ;

DimName1 = 'sApplication' ;

DimName2 = 'ScheduleMeasures' ;

# -- check number of elements --

nLong = DIMSIZ (DimName1) ;

nNum = 1 ;

WHILE ( nNum <= nLong ) ;

sNum = numbertostring (nNum) ;

# -- get the application name --

sApp = CELLGETS ( cube , sNum, 'Application' ) ;

# -- check that it is not empty --

IF (LONG (sApp) <> 0 );

# -- check that it is supposed to be started --

IF ('Y' @= CELLGETS ( cube, sNum, 'Start' ) ) ;

# -- get the time and put in the cube --

sTime = TIMST(now, '\Y-\m-\d \h:\i:\s');

CELLPUTS ( sTime, cube, sNum, 'Last Started' ) ;

# -- make the call to start --

sBatchFile = 'NET START "' | sApp |'"' ;

ExecuteCommand('cmd /c ' | sBatchFile, 0);

ENDIF;

ENDIF;

nNum = nNum +1 ;

END;
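Both processes pass 0 as the second argument to ExecuteCommand, so the TI process does not wait for NET STOP or NET START to finish, and any error message from the command is lost. A possible variation, shown here only as a sketch, waits for the command and appends its console output to a log file. The log path and the service name are hypothetical values for illustration, and the account that runs the TM1 service still needs permission to stop and start Windows services.

```
# -- sketch: stop one service, wait for it, and keep the console output --

# sLogFile is a hypothetical path; the folder must already exist
sLogFile = 'C:\temp\tm1_service_schedule.log' ;

# sApp would normally come from the ScheduleCube, as in the loop above
sApp = 'planning sample' ;

# ">>" appends to the log file, "2>&1" sends error text to the same file
sCommand = 'cmd /c NET STOP "' | sApp | '" >> "' | sLogFile | '" 2>&1' ;

# second argument 1 = wait until the command has finished
ExecuteCommand ( sCommand, 1 ) ;
```

Waiting makes the chore run longer, but the Last Stopped and Last Started timestamps then sit closer to the moment the service actually changed state, and the log file shows whether the command succeeded.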

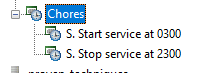

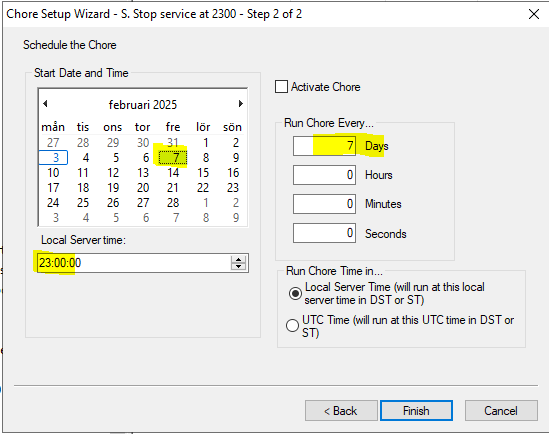

Schedule them in chores to run on Friday at 23:00 and on Monday at 03:00, so that the services are stopped before the weekend and started again after it.

I recommend that each TM1 application has a SaveDataAll process that runs every Friday, before the stop chore above.
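As a minimal sketch, the save process in each DEV instance can consist of a single SaveDataAll call in the Prolog tab. The log message text is only an illustration, and the process would be scheduled in a chore inside that instance shortly before 23:00 on Friday.

```
# -- Prolog: write all in-memory data of this TM1 instance to disk --
SaveDataAll ;

# -- leave a trace in tm1server.log so the save can be verified on Monday --
LogOutput ( 'INFO', 'SaveDataAll finished before weekend shutdown' ) ;
```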

Set the start date of the chore to the weekday on which it should stop the service, and set it to repeat every 7 days.

More information:

https://www.ibm.com/docs/en/planning-analytics/2.0.0?topic=smtf-savedataall

https://www.mci.co.za/business-performance-management/learning-mdx-views-in-ibm-planning-analytics/

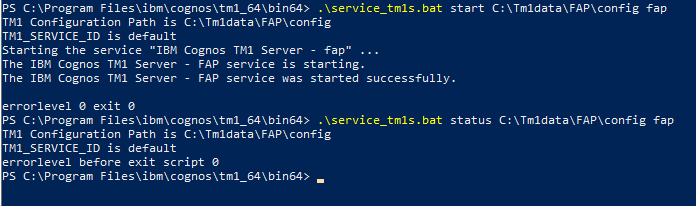

Here we start TM1 instance FAP, in folder c:\tm1data\FAP\config.
